Scaling Out a Binary-Deployed Kubernetes Cluster with New Worker Nodes

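Adding worker nodes takes two steps: first, copy the configuration from an existing node to the new nodes; second, bring the new nodes onto the container network.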

In this walkthrough I add two worker nodes at once, node03 and node04.

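I. Prerequisites

1. System preparation

Every system tuning and configuration step applied when the cluster was first deployed must be repeated on the new nodes, so that all nodes in the cluster share an identical environment.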

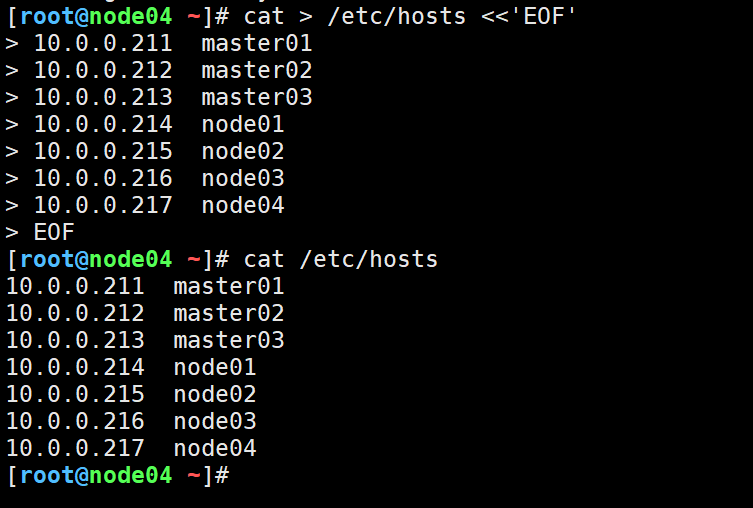

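(1) Hostname resolution

# Add the cluster hosts on every new node (appending keeps the existing localhost entries)

cat >> /etc/hosts <<'EOF'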

10.0.0.211 master01

10.0.0.212 master02

10.0.0.213 master03

10.0.0.214 node01

10.0.0.215 node02

10.0.0.216 node03

10.0.0.217 node04

EOF
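Running the heredoc a second time on the same node either duplicates or overwrites entries. A minimal sketch of an idempotent alternative follows; `add_host_entry` is a hypothetical helper that is not part of the original procedure, and the demo writes to a scratch file instead of /etc/hosts.

```shell
# add_host_entry FILE IP NAME (hypothetical helper)
# Appends "IP NAME" to FILE unless an uncommented line already maps NAME.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # Look for NAME as a whole field after the address column.
  if ! awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demo against a scratch file; pass /etc/hosts on a real node.
hosts_demo=$(mktemp)
add_host_entry "$hosts_demo" 10.0.0.216 node03
add_host_entry "$hosts_demo" 10.0.0.217 node04
add_host_entry "$hosts_demo" 10.0.0.216 node03   # repeated call changes nothing
cat "$hosts_demo"
```

Because the check is by hostname, the helper can be re-run safely whenever another node is added later.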

# Run on master01: push the SSH public key to the new nodes

ssh-copy-id -i ~/.ssh/id_rsa.pub root@node03

ssh-copy-id -i ~/.ssh/id_rsa.pub root@node04

(2) Configure yum repositories on the new nodes

On CentOS 7, set up the repositories as follows:

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

curl -o /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

(3) Install common packages on the new nodes

yum -y install bind-utils expect rsync wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git ntpdate

2. Base tuning

(1) Disable firewalld, SELinux, and NetworkManager on the new nodes

systemctl disable --now firewalld

systemctl disable --now NetworkManager

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

(2) Turn off swap on the new nodes and comment out the swap entry in fstab

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

free -h
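`free -h` only gives a visual check. The sketch below shows one way to script the same verification; `swap_devices` and `fstab_swap_entries` are hypothetical helper names, and the demo uses a scratch file in place of the real /etc/fstab.

```shell
# swap_devices [FILE] (hypothetical helper)
# Prints the active swap devices from a /proc/swaps-style file.
swap_devices() {
  # Line 1 is the column header; each later line is one active device.
  awk 'NR > 1 && NF > 0 { print $1 }' "${1:-/proc/swaps}"
}

# fstab_swap_entries [FILE] (hypothetical helper)
# Prints uncommented fstab lines whose filesystem type is swap.
fstab_swap_entries() {
  awk '!/^[[:space:]]*#/ && $3 == "swap"' "${1:-/etc/fstab}"
}

# Demo: an fstab whose swap line has already been commented out.
fstab_demo=$(mktemp)
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '#/dev/mapper/centos-swap swap swap defaults 0 0' > "$fstab_demo"
fstab_swap_entries "$fstab_demo"   # prints nothing for this sample
```

On a prepared node both helpers should print nothing when called without arguments.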

(3) Synchronize time on the new nodes

Set the time zone and synchronize the clock manually:

ln -svf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

ntpdate ntp.aliyun.com

Schedule periodic synchronization (add this line with "crontab -e"):

*/5 * * * * /usr/sbin/ntpdate ntp.aliyun.com
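Editing the crontab by hand does not scale to several nodes. As a sketch, the entry can be added non-interactively; `ensure_cron_line` is a hypothetical helper that filters a crontab passed on stdin, so it can be tried without touching the real crontab.

```shell
# ensure_cron_line LINE (hypothetical helper)
# Reads an existing crontab on stdin and prints it with LINE appended if missing.
ensure_cron_line() {
  local line=$1 current
  current=$(cat)
  if [ -n "$current" ]; then
    printf '%s\n' "$current"
  fi
  # -x matches the whole line, -F treats the cron expression literally.
  if ! printf '%s\n' "$current" | grep -qxF -- "$line"; then
    printf '%s\n' "$line"
  fi
}

job='*/5 * * * * /usr/sbin/ntpdate ntp.aliyun.com'
# Demo: applying the helper twice still yields a single entry.
printf '' | ensure_cron_line "$job" | ensure_cron_line "$job"
```

On a real node the helper would sit between reading and writing the crontab: `crontab -l 2>/dev/null | ensure_cron_line "$job" | crontab -`.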

(4) Configure resource limits on the new nodes

cat >> /etc/security/limits.conf <<'EOF'

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF
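A soft limit can never exceed the corresponding hard limit, so it is worth checking the file for such pairs after editing it. The following is a sketch only; `check_limits` is a hypothetical helper, and the sample values in the demo are chosen to trigger a report.

```shell
# check_limits FILE (hypothetical helper)
# Prints one line for every domain/item pair whose soft value exceeds its hard value.
check_limits() {
  awk '
    /^[[:space:]]*#/ || NF < 4 { next }          # skip comments and short lines
    $4 ~ /^[0-9]+$/ {                            # numeric values only ("unlimited" is ignored)
      v[$1 " " $3, $2] = $4 + 0
      keys[$1 " " $3] = 1
    }
    END {
      for (k in keys)
        if ((k, "soft") in v && (k, "hard") in v && v[k, "soft"] > v[k, "hard"])
          printf "%s: soft %d > hard %d\n", k, v[k, "soft"], v[k, "hard"]
    }' "$1"
}

# Demo on a scratch file with one inconsistent pair (nofile) and one consistent pair (nproc).
limits_demo=$(mktemp)
printf '%s\n' \
  '* soft nofile 655360' '* hard nofile 131072' \
  '* soft nproc 655350'  '* hard nproc 655350' > "$limits_demo"
check_limits "$limits_demo"
```

An empty result from `check_limits /etc/security/limits.conf` means every numeric soft limit fits within its hard limit.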

(5) Tune the sshd service on the new nodes

sed -i 's@#UseDNS yes@UseDNS no@g' /etc/ssh/sshd_config

sed -i 's@^GSSAPIAuthentication yes@GSSAPIAuthentication no@g' /etc/ssh/sshd_config

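- UseDNS:

When enabled, the SSH server performs a reverse DNS (PTR) lookup on the client's IP address at login to obtain a hostname, then a forward (A record) lookup on that hostname to check that it resolves back to the original IP address. This is meant to guard against client spoofing, but clients on dynamic IP addresses usually have no PTR record, so the lookups only add delay. It is better to turn the option off.

- GSSAPIAuthentication:

When this option is on (GSSAPIAuthentication yes), SSH logins can be very slow because GSSAPI is enabled on the server: during login the client performs a reverse lookup on the server's IP address, and if that address has no PTR record the login tends to stall at this step.

Both changes take effect once sshd is restarted (systemctl restart sshd).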
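To confirm that the two sed edits landed, the resulting values can be read back from the file. This is a sketch: `sshd_option` is a hypothetical helper, it relies on sshd using the first occurrence of a keyword, and the demo applies the same sed expressions to a two-line scratch file.

```shell
# sshd_option FILE KEY (hypothetical helper)
# Prints the first uncommented value of KEY; keywords are matched case-insensitively.
sshd_option() {
  awk -v key="$2" 'tolower($1) == tolower(key) { print $2; exit }' "$1"
}

# Demo: a scratch file that mimics the two stock lines, edited with the sed expressions from this step.
sshd_src=$(mktemp)
sshd_demo=$(mktemp)
printf '%s\n' '#UseDNS yes' 'GSSAPIAuthentication yes' > "$sshd_src"
sed -e 's@#UseDNS yes@UseDNS no@g' \
    -e 's@^GSSAPIAuthentication yes@GSSAPIAuthentication no@g' "$sshd_src" > "$sshd_demo"
sshd_option "$sshd_demo" UseDNS
sshd_option "$sshd_demo" GSSAPIAuthentication
```

On a real node, `sshd_option /etc/ssh/sshd_config UseDNS` should print `no`; running `sshd -t` before restarting the service catches syntax errors in the edited file.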

(6) Linux kernel tuning

cat > /etc/sysctl.d/k8s.conf <<'EOF'

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv6.conf.all.disable_ipv6 = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

sysctl --system
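`sysctl --system` reports keys it cannot set but keeps going, so some settings may silently be missing (for example, keys whose kernel module is not loaded yet). The sketch below compares a sysctl file with the live values under /proc/sys; `sysctl_path` and `check_sysctl_file` are hypothetical helper names, and the key-to-path mapping assumes no key component contains a dot of its own.

```shell
# sysctl_path KEY (hypothetical helper): maps a sysctl key to its /proc/sys path.
sysctl_path() {
  printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}

# check_sysctl_file FILE (hypothetical helper)
# Prints MISSING or DIFFERS for every "key = value" line that is not in effect.
check_sysctl_file() {
  # Drop comments and blank lines, then split each remaining line on "=".
  sed -e 's/#.*//' -e '/^[[:space:]]*$/d' "$1" | while IFS='=' read -r key want; do
    key=$(printf '%s' "$key" | tr -d '[:space:]')
    want=$(printf '%s' "$want" | tr -d '[:space:]')
    path=$(sysctl_path "$key")
    if [ ! -r "$path" ]; then
      echo "MISSING  $key"
    elif [ "$(tr -d '[:space:]' < "$path")" != "$want" ]; then
      echo "DIFFERS  $key (want $want)"
    fi
  done
}

# Only runs where the file from this step exists.
if [ -f /etc/sysctl.d/k8s.conf ]; then
  check_sysctl_file /etc/sysctl.d/k8s.conf
fi
```

No output means every key in the file is present and set to the requested value.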

3. Kernel upgrade

For cluster stability and compatibility, production nodes should run kernel 4.18 or later.

(1) Download and install the kernel packages

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

yum -y localinstall kernel-ml*

(2) Make the new kernel the default boot entry

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

grubby --default-kernel

(3) Update the remaining packages but exclude the kernel, which is already at the intended version

yum -y update --exclude=kernel*

4. Install ipvsadm

(1) Install ipvsadm and related tools

yum -y install ipvsadm ipset sysstat conntrack libseccomp

(2) Load the modules manually

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

(3) Create the configuration file that loads the modules at boot

cat > /etc/modules-load.d/ipvs.conf << 'EOF'

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

EOF

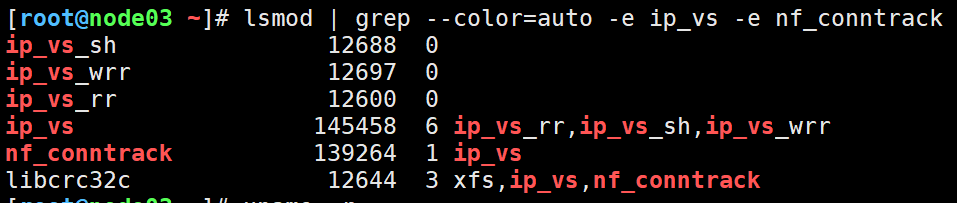

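(4) Check the loaded modules. As the figure above shows, these are modules of the Linux 3.10.x kernel, not the ones we need; the new kernel takes effect only after a reboot.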

lsmod | grep --color=auto -e ip_vs -e nf_conntrack

Note:

From kernel 4.19 onward, nf_conntrack_ipv4 has been merged into nf_conntrack; on 4.18 and earlier kernels, load nf_conntrack_ipv4 instead.
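The rule in the note can be expressed as a small version test, which is handy when the same script has to run on nodes with different kernels. This is a sketch: `version_ge` and `conntrack_module` are hypothetical helpers, and the comparison relies on `sort -V` from GNU coreutils.

```shell
# version_ge A B (hypothetical helper): succeeds when version A >= version B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# conntrack_module [KERNEL_RELEASE] (hypothetical helper)
# Prints the conntrack module name to load for the given (or running) kernel.
conntrack_module() {
  local release=${1:-$(uname -r)}
  # Compare only the numeric part before the first "-".
  if version_ge "${release%%-*}" 4.19; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

conntrack_module 4.19.12-1.el7.elrepo.x86_64   # the kernel installed in this guide
conntrack_module 3.10.0                        # a sample 3.10.x release
```

A node script could then call `modprobe -- "$(conntrack_module)"` instead of hard-coding the module name.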

5. Reboot the new nodes

(1) Check the running kernel version

uname -r

(2) Check the default boot kernel

grubby --default-kernel

(3) Reboot every new node

reboot

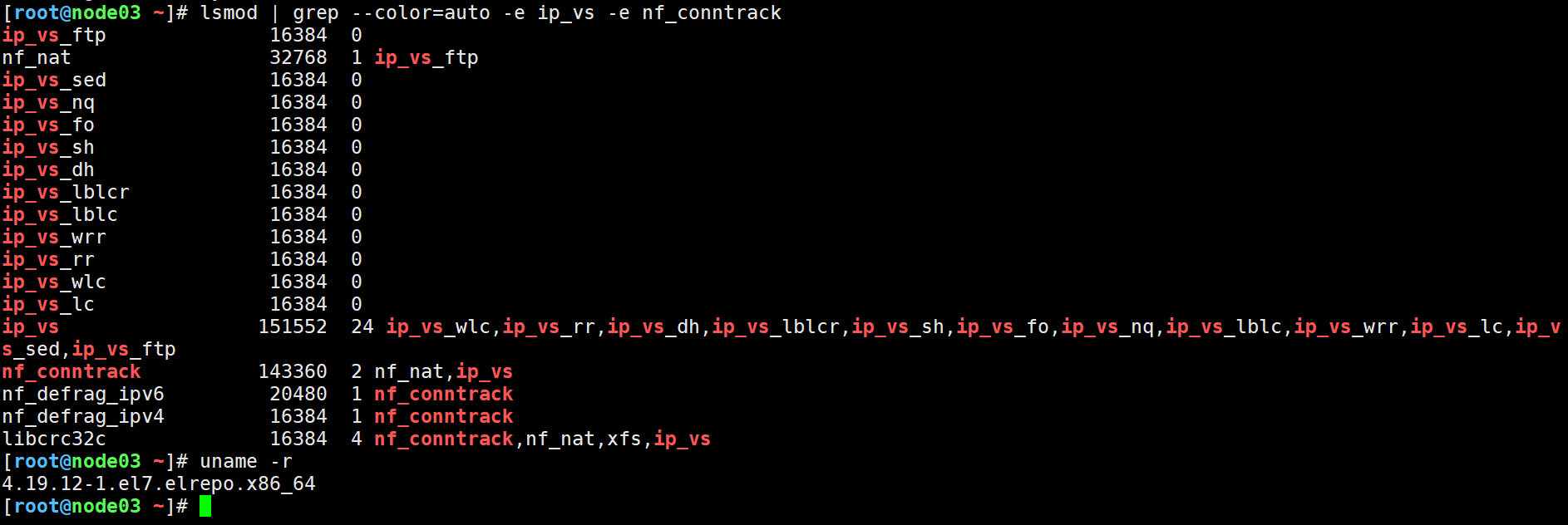

(4) Check that the IPVS kernel modules are loaded. As the figure above shows, more modules are available than before the upgrade.

lsmod | grep --color=auto -e ip_vs -e nf_conntrack
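Reading the grep output by eye makes it easy to miss a module. As a sketch, the check can be turned into a list of what is absent; `missing_modules` is a hypothetical helper that reads `lsmod` output on stdin, and the demo feeds it a hand-written sample instead of real output. Modules compiled into the kernel do not appear in `lsmod`, so a reported name is a hint to investigate, not proof of a problem.

```shell
# missing_modules MODULE... (hypothetical helper)
# Reads lsmod output on stdin and prints each named module that is not listed.
missing_modules() {
  local loaded m
  loaded=$(awk 'NR > 1 { print $1 }')   # skip the "Module Size Used by" header
  for m in "$@"; do
    printf '%s\n' "$loaded" | grep -qxF -- "$m" || echo "$m"
  done
}

# Demo with an illustrative lsmod excerpt (not captured from a real node).
printf '%s\n' \
  'Module                  Size  Used by' \
  'ip_vs_rr               16384  0' \
  'ip_vs                 151552  2 ip_vs_rr' \
  'nf_conntrack          143360  1 ip_vs' |
  missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```

On a node, the same check is `lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack`; empty output means all five are loaded.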

(5) Check the kernel version again to confirm the upgrade

uname -r
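Comparing `uname -r` with `grubby --default-kernel` by eye works, but the comparison is easy to script. The sketch below assumes that `grubby --default-kernel` prints a path of the form /boot/vmlinuz-<release>; `release_from_vmlinuz` and `booted_default` are hypothetical helper names.

```shell
# release_from_vmlinuz PATH (hypothetical helper)
# Strips the directory and the "vmlinuz-" prefix from a kernel image path.
release_from_vmlinuz() {
  local base=${1##*/}
  printf '%s\n' "${base#vmlinuz-}"
}

# booted_default RUNNING_RELEASE DEFAULT_KERNEL_PATH (hypothetical helper)
# Succeeds when the running kernel is the configured default.
booted_default() {
  [ "$1" = "$(release_from_vmlinuz "$2")" ]
}

# Demo with the kernel package installed in this guide.
if booted_default 4.19.12-1.el7.elrepo.x86_64 /boot/vmlinuz-4.19.12-1.el7.elrepo.x86_64; then
  echo "running the default kernel"
else
  echo "still on a different kernel"
fi
```

On a node the call would be `booted_default "$(uname -r)" "$(grubby --default-kernel)"`.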

6. Deploy containerd

# Load the kernel modules containerd needs

cat >/etc/modules-load.d/containerd.conf<<'EOF'

overlay

br_netfilter

EOF

systemctl restart systemd-modules-load.service

cat >/etc/sysctl.d/99-kubernetes-cri.conf<<'EOF'

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# Apply the kernel parameters

sysctl --system

# Add the Aliyun docker-ce yum repository

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# List the containerd packages available in the repository

yum list | grep containerd

containerd.io.x86_64 1.4.12-3.1.el7 docker-ce-stable

# Install containerd

yum install -y containerd.io

Generate the containerd configuration file

# Create the configuration directory

mkdir -p /etc/containerd

# Generate the default configuration

containerd config default > /etc/containerd/config.toml

# Edit the configuration

vim /etc/containerd/config.toml

-----

Change SystemdCgroup = false to SystemdCgroup = true

# sandbox_image = "k8s.gcr.io/pause:3.6"

Change it to:

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"
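With more than one node to prepare, the two edits are easier to apply with sed than in an editor. A minimal sketch follows; `patch_containerd_config` is a hypothetical helper, it matches the `sandbox_image` key instead of its old value because the default image differs between containerd versions, and the demo patches a two-line scratch file.

```shell
# patch_containerd_config FILE (hypothetical helper)
# Switches runc to the systemd cgroup driver and points sandbox_image at the Aliyun mirror.
patch_containerd_config() {
  local file=$1 tmp rc
  tmp=$(mktemp)
  sed -e 's#SystemdCgroup = false#SystemdCgroup = true#' \
      -e 's#^\([[:space:]]*sandbox_image = \).*#\1"registry.aliyuncs.com/google_containers/pause:3.6"#' \
      "$file" > "$tmp" && cat "$tmp" > "$file"   # rewrite in place, keeping file permissions
  rc=$?
  rm -f "$tmp"
  return $rc
}

# Demo on a scratch file holding only the two relevant lines.
cfg_demo=$(mktemp)
printf '%s\n' \
  '    sandbox_image = "k8s.gcr.io/pause:3.6"' \
  '            SystemdCgroup = false' > "$cfg_demo"
patch_containerd_config "$cfg_demo"
cat "$cfg_demo"
```

On a node this would be `patch_containerd_config /etc/containerd/config.toml`, followed by a containerd restart if the service is already running.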

# Start containerd and enable it at boot

systemctl enable --now containerd

systemctl status containerd

# Verify

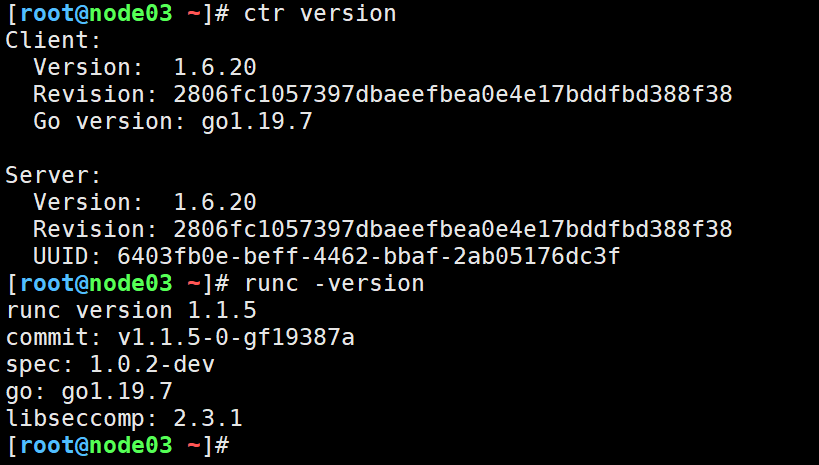

ctr version

runc -version

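II. Distribute certificates and configuration files

1. Distribute certificates

Run on master01

# Copy the kubelet and kube-proxy binaries to the new nodes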

for NODE in node03 node04; do scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/ ; done

# Copy the certificates to the new nodes

cd /etc/kubernetes/

for NODE in node03 node04; do
  ssh $NODE mkdir -p /etc/kubernetes/pki /etc/etcd/ssl
  for FILE in etcd-ca.pem etcd.pem etcd-key.pem; do
    scp /etc/etcd/ssl/$FILE $NODE:/etc/etcd/ssl/
  done
  for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig; do
    scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}
  done
done

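Note:

Worker nodes are configured to have their certificates issued automatically (via bootstrap-kubelet.kubeconfig), so no per-node kubelet certificate is copied.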
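A failed scp is easy to overlook inside a loop, so it can help to confirm that every file arrived before starting the services. This is a sketch: `missing_node_files` is a hypothetical helper, the file list simply mirrors the copy commands in this section, and the optional ROOT argument exists only so the helper can be tried against a scratch directory.

```shell
# missing_node_files [ROOT] (hypothetical helper)
# Prints every expected file that is absent or empty under ROOT (default: /).
missing_node_files() {
  local root=${1:-} f
  for f in \
    /usr/local/bin/kubelet \
    /usr/local/bin/kube-proxy \
    /etc/etcd/ssl/etcd-ca.pem \
    /etc/etcd/ssl/etcd.pem \
    /etc/etcd/ssl/etcd-key.pem \
    /etc/kubernetes/pki/ca.pem \
    /etc/kubernetes/pki/ca-key.pem \
    /etc/kubernetes/pki/front-proxy-ca.pem \
    /etc/kubernetes/bootstrap-kubelet.kubeconfig
  do
    [ -s "$root$f" ] || echo "$f"
  done
}

# Demo: an empty scratch directory is missing all nine files.
files_demo=$(mktemp -d)
missing_node_files "$files_demo"
```

From master01, one way to run it remotely in bash is `ssh "$NODE" "$(declare -f missing_node_files); missing_node_files"`, which ships the function definition along with the call.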

2. Distribute kubelet

# Copy the kubelet configuration files to the new nodes and start kubelet

for NODE in node03 node04; do
  ssh $NODE mkdir -p /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d /etc/kubernetes/manifests/
  scp /usr/lib/systemd/system/kubelet.service $NODE:/usr/lib/systemd/system/kubelet.service
  scp /etc/systemd/system/kubelet.service.d/kubelet.conf $NODE:/etc/systemd/system/kubelet.service.d/kubelet.conf
  scp /etc/kubernetes/kubelet-conf.yml $NODE:/etc/kubernetes/kubelet-conf.yml
  # Quote the remote command so that both systemctl calls run on $NODE, not on master01
  ssh $NODE "systemctl daemon-reload && systemctl enable --now kubelet"
done

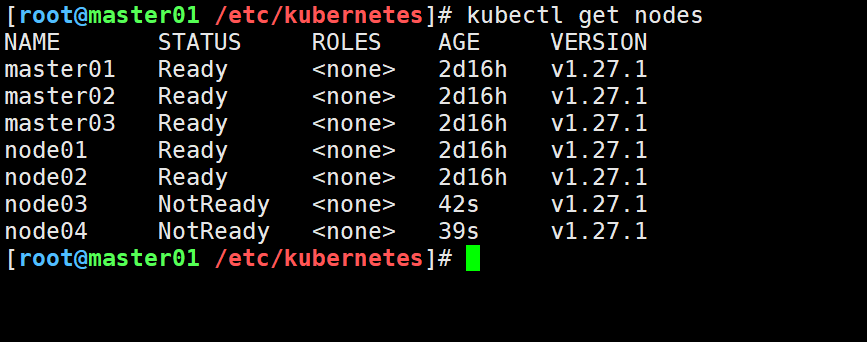

# Check the cluster state from master01

kubectl get nodes
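New nodes usually start out NotReady until the network plugin pod is running on them. As a sketch, the output of `kubectl get nodes --no-headers` can be filtered to show only the nodes that still need attention; `not_ready_nodes` is a hypothetical helper, and the three sample lines in the demo are illustrative, not captured from a cluster.

```shell
# not_ready_nodes (hypothetical helper)
# Reads `kubectl get nodes --no-headers` output on stdin and prints the names
# of nodes whose STATUS column does not start with "Ready".
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Demo with illustrative input (name, status, roles only).
printf '%s\n' \
  'node01 Ready <none>' \
  'node03 NotReady <none>' \
  'node04 Ready,SchedulingDisabled <none>' | not_ready_nodes
```

A simple wait loop on master01 could then be `until [ -z "$(kubectl get nodes --no-headers | not_ready_nodes)" ]; do sleep 5; done`.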

3. Distribute kube-proxy

for NODE in node03 node04; do
  scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig
  scp /etc/kubernetes/kube-proxy.conf $NODE:/etc/kubernetes/kube-proxy.conf
  scp /etc/kubernetes/kube-proxy-config.yml $NODE:/etc/kubernetes/kube-proxy-config.yml
  scp /usr/lib/systemd/system/kube-proxy.service $NODE:/usr/lib/systemd/system/kube-proxy.service
  # Replace the master01 hostname in the copied config with this node's own name
  ssh $NODE "sed -i 's#master01#$NODE#g' /etc/kubernetes/kube-proxy-config.yml"
  ssh $NODE "systemctl daemon-reload && systemctl enable --now kube-proxy"
done

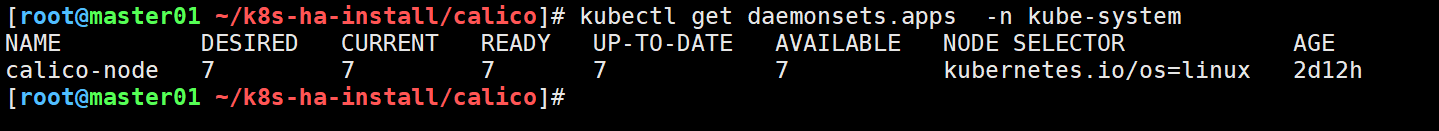

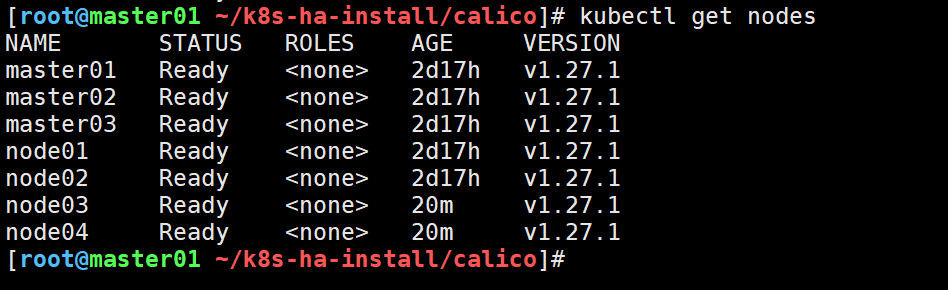

4. Network plugin deployment

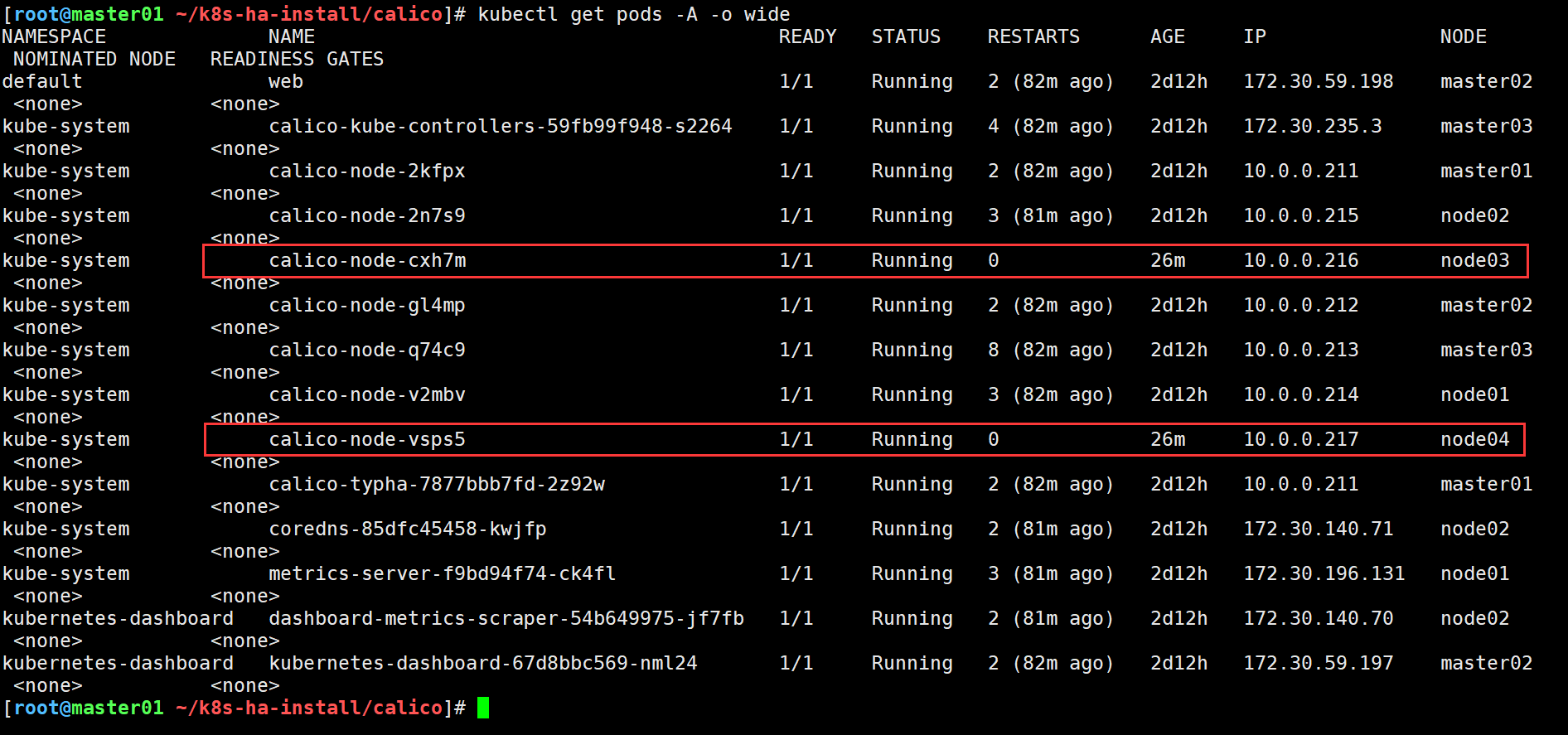

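I use the Calico network plugin here. As the figure below shows, it runs as a DaemonSet, so a pod is created automatically on every node in the cluster, including nodes that join later.

The network plugin therefore needs no manual installation on the new nodes; after a short wait they become Ready on their own.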

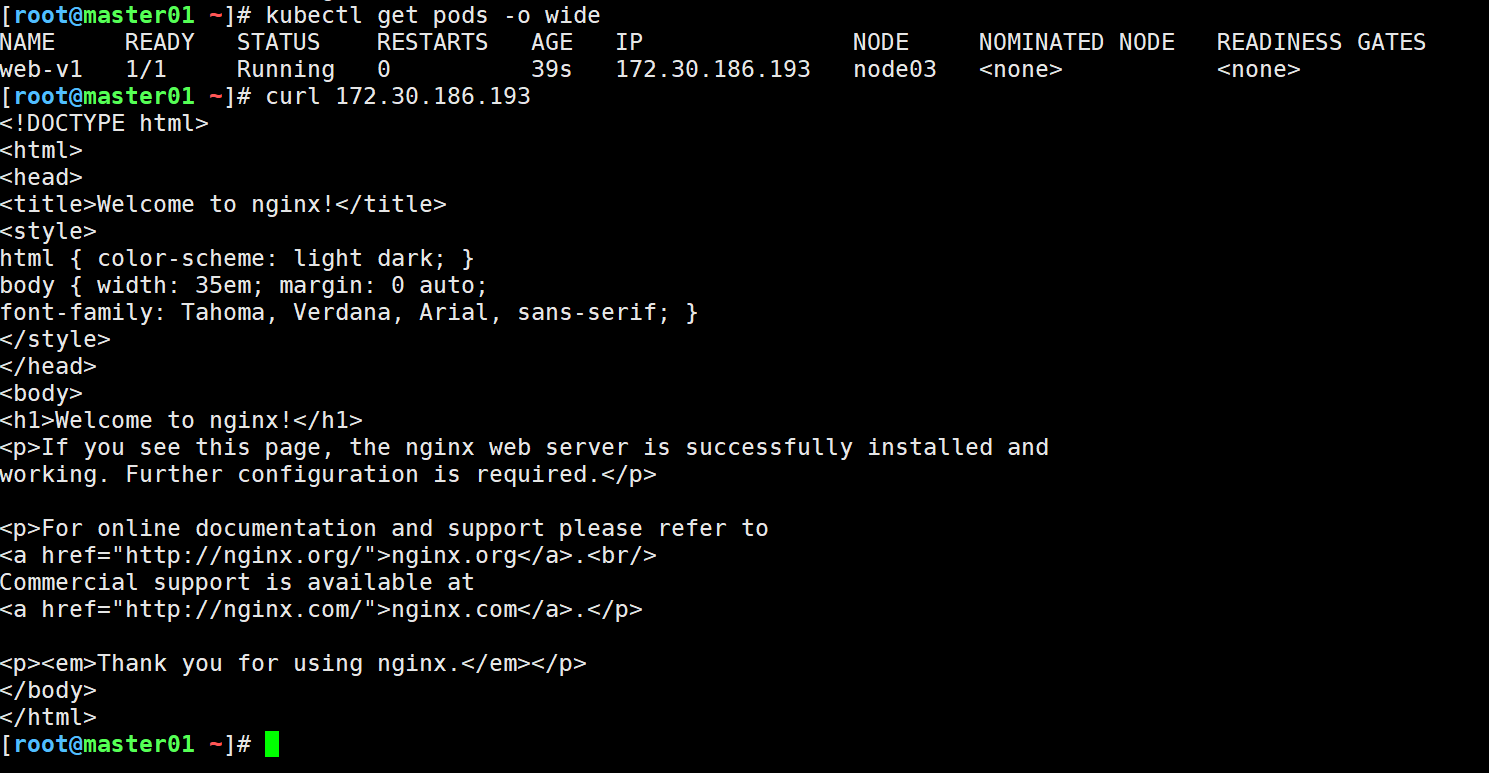

5. Verify that the new nodes work

# Create an nginx pod pinned to one of the new nodes

cat >nginx.yaml<<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: web-v1
spec:
  nodeName: node03
  containers:
  - name: nginx-v1
    image: nginx:1.21
EOF

kubectl apply -f nginx.yaml

# The result is as follows: