- etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

- etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

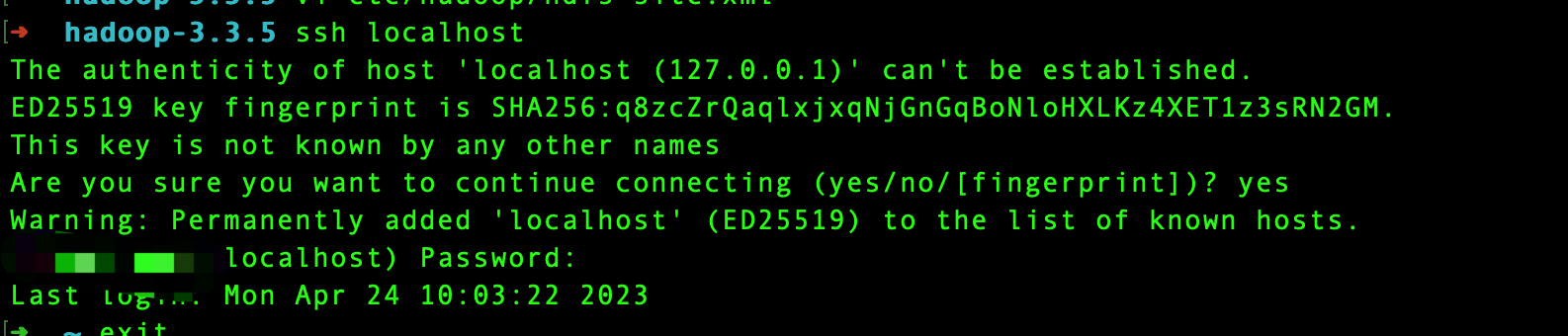

</configuration>ssh localhost

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

./bin/hdfs namenode -format

./sbin/start-dfs.sh

File operations

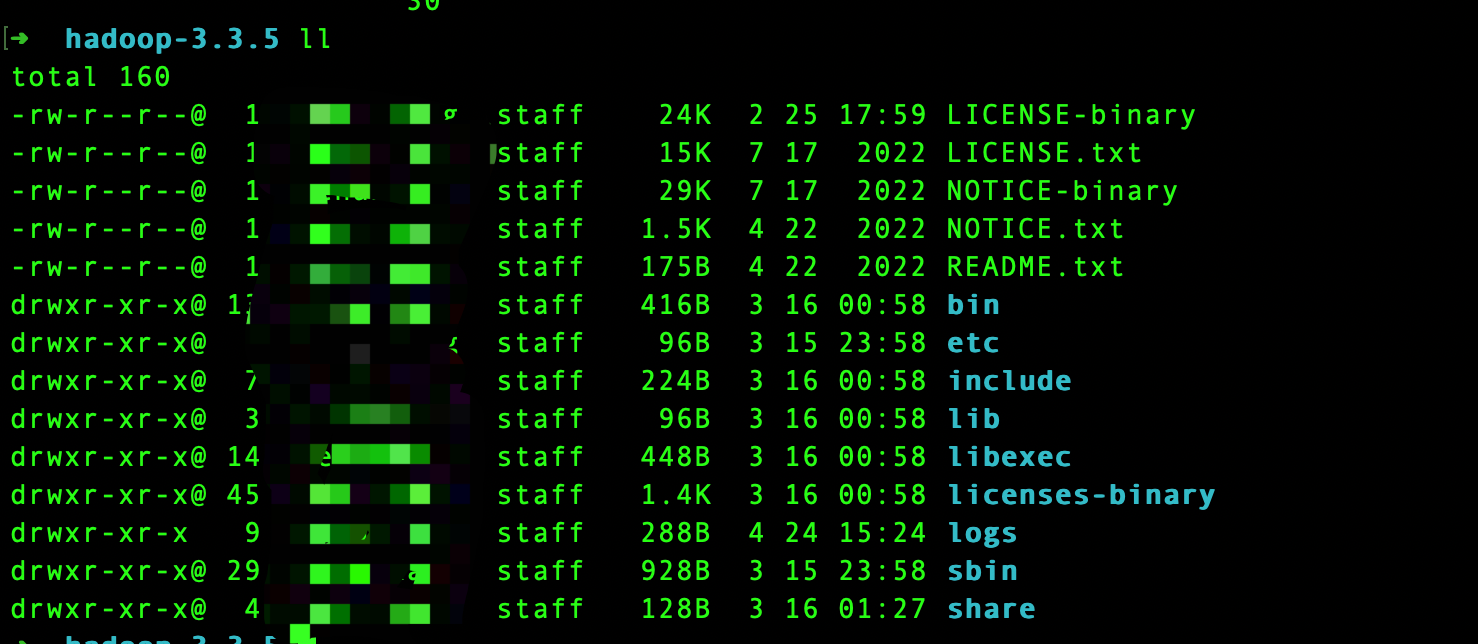

<properties>

<hadoop.version>3.3.5</hadoop.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>${hadoop.version}</version>

<scope>provided</scope>

</dependency>

</dependencies>

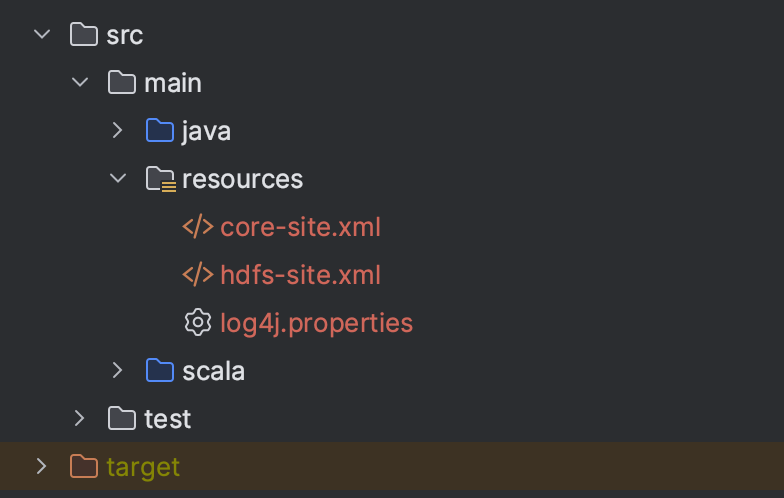

import org.apache.hadoop.conf.Configuration

import org.apache.hadoop.fs.{FileSystem, Path}

import java.nio.charset.StandardCharsets

object HdfsFileOperationTest {

def main(args: Array[String]): Unit = {

val conf = new Configuration()

conf.set("fs.defaultFS", "hdfs://localhost:9000")

val fs = FileSystem.get(conf)

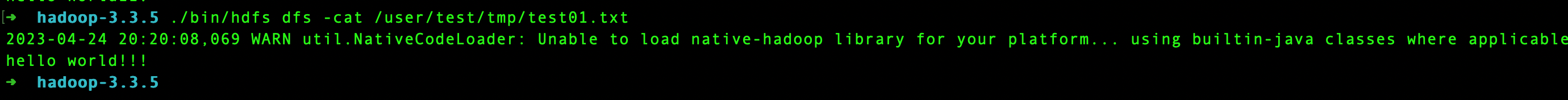

val path = new Path("/user/test/tmp/test01.txt")

// Write the file

val out = fs.create(path)

val str = "hello world!!!\n"

out.write(str.getBytes(StandardCharsets.UTF_8))

out.close()

// Read the file

val in = fs.open(path)

val bytes = new Array[Byte](1024)

val len = in.read(bytes)

if (len > 0) println(new String(bytes, 0, len, StandardCharsets.UTF_8))

in.close()

}

}
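The read step above calls `read` once with a 1024-byte buffer, which is enough for the short test string but would truncate a larger file. A minimal sketch of a looped read follows. `StreamUtil` and `readAll` are hypothetical names introduced here for illustration and are not part of Hadoop. The helper only assumes a `java.io.InputStream`, which the stream returned by `fs.open(path)` is.

```scala
import java.io.{ByteArrayOutputStream, InputStream}
import java.nio.charset.StandardCharsets

object StreamUtil {
  // Hypothetical helper (not a Hadoop API): reads the stream until
  // end-of-stream, closes it, and decodes the collected bytes as UTF-8.
  def readAll(in: InputStream, bufferSize: Int = 1024): String = {
    val buffer = new Array[Byte](bufferSize)
    val collected = new ByteArrayOutputStream()
    try {
      var len = in.read(buffer)
      while (len != -1) {            // read returns -1 at end of stream
        collected.write(buffer, 0, len)
        len = in.read(buffer)
      }
    } finally {
      in.close()                     // close even if a read fails
    }
    // Decode once at the end so multi-byte characters are never split
    // across buffer boundaries.
    new String(collected.toByteArray, StandardCharsets.UTF_8)
  }
}
```

With this in place, the read step could presumably be written as `println(StreamUtil.readAll(fs.open(path)))`, leaving the rest of `HdfsFileOperationTest` unchanged.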