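ffmpeg and SDL

Decoding video with ffmpeg and rendering it to an SDL window

Preface

There are plenty of examples online that decode video with ffmpeg and render it to a window, but most of them skip important details such as releasing resources, flushing the decoder's buffered frames, and multithreaded decoding. As a result, putting together a quick demo usually means rewriting a fair amount of code. This article provides a more complete example that can be copied and used directly.

I. Decoding with ffmpeg

The ffmpeg decoding flow is a classic, general-purpose one; it is essentially the same whether the input is a file, a network stream, or a camera.

1. Open the input stream

First open the input stream. The input can be a file or an rtmp, rtsp, or http URL, among others.

AVFormatContext* pFormatCtx = NULL;
const char* input = "test.mp4";
//Open the input stream
avformat_open_input(&pFormatCtx, input, NULL, NULL);
//Read the stream information
avformat_find_stream_info(pFormatCtx, NULL);

2. Find the video stream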

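Because we are rendering video, we need to locate the video stream in the input. Iterate over the streams and pick the one whose codec_type is AVMEDIA_TYPE_VIDEO. An input may contain several video streams; here we take the first one.

//Index of the video stream
int videoindex = -1;
for (unsigned i = 0; i < pFormatCtx->nb_streams; i++)
    if (pFormatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
        videoindex = i;
        break;
    }

3. Open the decoder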

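Copy the decoder parameters from the stream information, look up the corresponding decoder, and then open it.

AVCodecContext* pCodecCtx = NULL;
const AVCodec* pCodec = NULL;
//Allocate the decoding context
pCodecCtx = avcodec_alloc_context3(NULL);
//Copy the decoder parameters
avcodec_parameters_to_context(pCodecCtx, pFormatCtx->streams[videoindex]->codecpar);
//Find the decoder
pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
//Open the decoder (opts is the option dictionary set up below)
avcodec_open2(pCodecCtx, pCodec, &opts);

When opening the decoder, a threading option can be passed to speed up decoding. The dictionary has to be filled in before the avcodec_open2 call above.

AVDictionary* opts = NULL;
//Use multithreaded decoding
if (!av_dict_get(opts, "threads", NULL, 0))
    av_dict_set(&opts, "threads", "auto", 0);

4. Decode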

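Decoding consists of reading packets from the input stream, sending each packet to the decoder, and receiving the decoded frames.

AVPacket packet;
AVFrame* pFrame = av_frame_alloc();
//Read a packet
while (av_read_frame(pFormatCtx, &packet) == 0)
{
    //Send the packet
    avcodec_send_packet(pCodecCtx, &packet);
    //Receive frames
    while (avcodec_receive_frame(pCodecCtx, pFrame) == 0)
    {
        //pFrame now holds a decoded frame
        av_frame_unref(pFrame);
    }
    av_packet_unref(&packet);
}

One detail needs attention: once av_read_frame reaches the end of the file, avcodec_send_packet must be called one more time with NULL or an empty packet to flush out the frames still buffered in the decoder. The complete decoding flow is: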

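while (1)
{
    int gotPacket = av_read_frame(pFormatCtx, &packet) == 0;
    //!gotPacket: no packet was read, so the decoder's buffered frames must be flushed and the decode step runs once more.
    //On failure av_read_frame leaves the packet blank (data == NULL, size == 0), which the decoder treats as a flush packet.
    if (!gotPacket || packet.stream_index == videoindex)
    {
        //Send the packet
        if (avcodec_send_packet(pCodecCtx, &packet) < 0)
        {
            printf("Decode error.\n");
            av_packet_unref(&packet);
            goto end;
        }
        //Receive the decoded frames
        while (avcodec_receive_frame(pCodecCtx, pFrame) == 0) {
            //pFrame now holds a decoded frame
            av_frame_unref(pFrame);
        }
    }
    av_packet_unref(&packet);
    if (!gotPacket)
        break;
}

5. Resampling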

When the pixel format or resolution of the decoded frame differs from what the output target expects, the frame has to be converted, which libswscale handles. The conversion is normally done inside the decoding loop.

struct SwsContext* swsContext = NULL;
enum AVPixelFormat forceFormat = AV_PIX_FMT_YUV420P;
uint8_t* outBuffer = NULL;
uint8_t* dst_data[4];
int dst_linesize[4];
if (forceFormat != pCodecCtx->pix_fmt)
{
    swsContext = sws_getCachedContext(swsContext, pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt, pCodecCtx->width, pCodecCtx->height, forceFormat, SWS_FAST_BILINEAR, NULL, NULL, NULL);
    //Allocate the output buffer once; use the same alignment as av_image_fill_arrays below
    if (!outBuffer)
        outBuffer = (uint8_t*)av_malloc(av_image_get_buffer_size(forceFormat, pCodecCtx->width, pCodecCtx->height, 1));
    av_image_fill_arrays(dst_data, dst_linesize, outBuffer, forceFormat, pCodecCtx->width, pCodecCtx->height, 1);
    sws_scale(swsContext, pFrame->data, pFrame->linesize, 0, pFrame->height, dst_data, dst_linesize);
}

6. Release resources

Release the resources once they are no longer needed.

if (pFrame)
    av_frame_free(&pFrame);
if (pCodecCtx)
{
    avcodec_close(pCodecCtx);
    avcodec_free_context(&pCodecCtx);
}
//avformat_close_input frees the context and sets the pointer to NULL, so no separate avformat_free_context call is needed
if (pFormatCtx)
    avformat_close_input(&pFormatCtx);
if (swsContext)
    sws_freeContext(swsContext);
av_dict_free(&opts);
if (outBuffer)
    av_free(outBuffer);

II. Rendering with SDL

1. Initialize SDL

SDL must be initialized before it is used. This only needs to happen once for the whole program.

if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {
    printf("Could not initialize SDL - %s\n", SDL_GetError());
    return -1;
}

2. Create the window

Call SDL_CreateWindow directly, passing the window title, position, size, and flags. The example below creates an OpenGL window.

//Create the window
SDL_Window* screen = SDL_CreateWindow("video play window", SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED, screen_w, screen_h, SDL_WINDOW_OPENGL);

3. Create the texture

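First create a renderer for the window, then use the renderer to create a texture with the same size as the video. The texture's pixel format must also be specified; SDL_PIXELFORMAT_IYUV in the example below corresponds to ffmpeg's AV_PIX_FMT_YUV420P.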
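To make that correspondence concrete, the sketch below computes the plane sizes and pitches of a tightly packed YUV420P (IYUV) image, which is the three-plane layout that SDL_UpdateYUVTexture receives. The `Yuv420pLayout` struct and the `yuv420p_layout` helper are hypothetical names introduced only for illustration. Decoded frames may use padded rows, so in real code the linesize values reported by ffmpeg take precedence over these tightly packed numbers.

```c
#include <stddef.h>

/* Plane geometry of a tightly packed YUV420P / IYUV image (illustrative). */
typedef struct {
    size_t y_size;   /* bytes in the Y plane */
    size_t uv_size;  /* bytes in each of the U and V planes */
    int    y_pitch;  /* bytes per row of Y */
    int    uv_pitch; /* bytes per row of U or V */
} Yuv420pLayout;

/* Hypothetical helper: chroma is subsampled by 2 horizontally and
 * vertically, rounding up for odd dimensions. */
static Yuv420pLayout yuv420p_layout(int width, int height)
{
    Yuv420pLayout l;
    int cw = (width + 1) / 2;   /* chroma width */
    int ch = (height + 1) / 2;  /* chroma height */
    l.y_pitch  = width;
    l.uv_pitch = cw;
    l.y_size   = (size_t)width * (size_t)height;
    l.uv_size  = (size_t)cw * (size_t)ch;
    return l;
}
```

For a 640x360 video this gives a 230400-byte Y plane and two 57600-byte chroma planes, so one frame occupies 1.5 bytes per pixel.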

SDL_Renderer* sdlRenderer = SDL_CreateRenderer(screen, -1, 0);
//Create a texture with the same size as the video
SDL_Texture* sdlTexture = SDL_CreateTexture(sdlRenderer, SDL_PIXELFORMAT_IYUV, SDL_TEXTUREACCESS_STREAMING, pCodecCtx->width, pCodecCtx->height);

4. Render

Rendering needs a window rectangle and a video rectangle. The video data is uploaded to the texture, the texture is copied to the renderer's back buffer, and the result is then presented. The example below renders yuv420p data.

//Window rectangle
SDL_Rect sdlRect;
sdlRect.x = 0;
sdlRect.y = 0;
sdlRect.w = screen_w;
sdlRect.h = screen_h;
//Video rectangle
SDL_Rect sdlRect2;
sdlRect2.x = 0;
sdlRect2.y = 0;
sdlRect2.w = pCodecCtx->width;
sdlRect2.h = pCodecCtx->height;
//Render to the SDL window
SDL_RenderClear(sdlRenderer);
SDL_UpdateYUVTexture(sdlTexture, &sdlRect2, dst_data[0], dst_linesize[0], dst_data[1], dst_linesize[1], dst_data[2], dst_linesize[2]);
SDL_RenderCopy(sdlRenderer, sdlTexture, NULL, &sdlRect);
SDL_RenderPresent(sdlRenderer);

5. Release resources

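Destroy the resources once they are no longer needed, as shown below. SDL_Quit is not strictly required: it is normally called only when the program exits, at which point it makes little difference whether it is called.

if (sdlTexture)
    SDL_DestroyTexture(sdlTexture);
if (sdlRenderer)
    SDL_DestroyRenderer(sdlRenderer);
if (screen)
    SDL_DestroyWindow(screen);
SDL_Quit();

III. Complete code

Combining the snippets above gives a complete video decoding and rendering flow.

SDK versions used by the example: ffmpeg 4.3, sdl2

Runs on both Windows and Linux.

Code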

#include <stdio.h>

#include <string.h>

#include <SDL.h>

#include "libavformat/avformat.h"

#include "libavcodec/avcodec.h"

#include "libswscale/swscale.h"

#include "libavutil/imgutils.h"

#undef main

int main(int argc, char** argv) {

const char* input = "D:\\FFmpeg\\test.mp4";

enum AVPixelFormat forceFormat = AV_PIX_FMT_YUV420P;

AVFormatContext* pFormatCtx = NULL;

AVCodecContext* pCodecCtx = NULL;

const AVCodec* pCodec = NULL;

AVDictionary* opts = NULL;

AVPacket packet;

AVFrame* pFrame = NULL;

struct SwsContext* swsContext = NULL;

uint8_t* outBuffer = NULL;

int videoindex = -1;

int exitFlag = 0;

int isLoop = 1;

double framerate;

int screen_w = 640, screen_h = 360;

SDL_Renderer* sdlRenderer = NULL;

SDL_Texture* sdlTexture = NULL;

//Initialize SDL

if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {

printf("Could not initialize SDL - %s\n", SDL_GetError());

return -1;

}

//Create the window

SDL_Window* screen = SDL_CreateWindow("video play window", SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED,

screen_w, screen_h,

SDL_WINDOW_OPENGL);

if (!screen) {

printf("SDL: could not create window - exiting:%s\n", SDL_GetError());

return -1;

}

//Open the input stream

if (avformat_open_input(&pFormatCtx, input, NULL, NULL) != 0) {

printf("Couldn't open input stream.\n");

goto end;

}

//Read the stream information

if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {

printf("Couldn't find stream information.\n");

goto end;

}

//Find the video stream

for (unsigned i = 0; i < pFormatCtx->nb_streams; i++)

if (pFormatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {

videoindex = i;

break;

}

if (videoindex == -1) {

printf("Didn't find a video stream.\n");

goto end;

}

//Allocate the decoding context

pCodecCtx = avcodec_alloc_context3(NULL);

if (pCodecCtx == NULL)

{

printf("Could not allocate AVCodecContext\n");

goto end;

}

//Copy the decoder parameters and find the decoder

if (avcodec_parameters_to_context(pCodecCtx, pFormatCtx->streams[videoindex]->codecpar) < 0)

{

printf("Could not init AVCodecContext\n");

goto end;

}

pCodec = avcodec_find_decoder(pCodecCtx->codec_id);

if (pCodec == NULL) {

printf("Codec not found.\n");

goto end;

}

//Use multithreaded decoding

if (!av_dict_get(opts, "threads", NULL, 0))

av_dict_set(&opts, "threads", "auto", 0);

//Open the decoder

if (avcodec_open2(pCodecCtx, pCodec, &opts) < 0) {

printf("Could not open codec.\n");

goto end;

}

if (pCodecCtx->width == 0 || pCodecCtx->height == 0)

{

printf("Invalid video size.\n");

goto end;

}

if (pCodecCtx->pix_fmt == AV_PIX_FMT_NONE)

{

printf("Unknown pixel format.\n");

goto end;

}

pFrame = av_frame_alloc();

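//Frame rate used for the per-frame delay; falls back to 25 fps (an assumed default) when the container reports none

framerate = pFormatCtx->streams[videoindex]->avg_frame_rate.num > 0 && pFormatCtx->streams[videoindex]->avg_frame_rate.den > 0 ? av_q2d(pFormatCtx->streams[videoindex]->avg_frame_rate) : 25.0;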

start:

while (!exitFlag)

{

//Read a packet

int gotPacket = av_read_frame(pFormatCtx, &packet) == 0;

if (!gotPacket || packet.stream_index == videoindex)

//!gotPacket: no packet was read, so the decoder's buffered frames must be flushed and the decode step runs once more.

{

//Send the packet

if (avcodec_send_packet(pCodecCtx, &packet) < 0)

{

printf("Decode error.\n");

av_packet_unref(&packet);

goto end;

}

//Receive the decoded frames

while (avcodec_receive_frame(pCodecCtx, pFrame) == 0) {

uint8_t* dst_data[4];

int dst_linesize[4];

if (forceFormat != pCodecCtx->pix_fmt)

//Resampling: pixel format conversion

{

swsContext = sws_getCachedContext(swsContext, pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt, pCodecCtx->width, pCodecCtx->height, forceFormat, SWS_FAST_BILINEAR, NULL, NULL, NULL);

if (!outBuffer)

outBuffer = (uint8_t*)av_malloc(av_image_get_buffer_size(forceFormat, pCodecCtx->width, pCodecCtx->height, 1));

av_image_fill_arrays(dst_data, dst_linesize, outBuffer, forceFormat, pCodecCtx->width, pCodecCtx->height, 1);

if (sws_scale(swsContext, pFrame->data, pFrame->linesize, 0, pFrame->height, dst_data, dst_linesize) < 0)

{

printf("Call sws_scale error.\n");

av_frame_unref(pFrame);

av_packet_unref(&packet);

goto end;

}

}

else

{

memcpy(dst_data, pFrame->data, sizeof(uint8_t*) * 4);

memcpy(dst_linesize, pFrame->linesize, sizeof(int) * 4);

}

if (!sdlRenderer)

//Create the SDL renderer and texture on first use

{

sdlRenderer = SDL_CreateRenderer(screen, -1, 0);

if (!sdlRenderer)

{

printf("Create sdl renderer error.\n");

av_frame_unref(pFrame);

av_packet_unref(&packet);

goto end;

}

//Create a texture with the same size as the video

sdlTexture = SDL_CreateTexture(sdlRenderer, SDL_PIXELFORMAT_IYUV, SDL_TEXTUREACCESS_STREAMING, pCodecCtx->width, pCodecCtx->height);

if (!sdlTexture)

{

printf("Create sdl texture error.\n");

av_frame_unref(pFrame);

av_packet_unref(&packet);

goto end;

}

}

//Window rectangle

SDL_Rect sdlRect;

sdlRect.x = 0;

sdlRect.y = 0;

sdlRect.w = screen_w;

sdlRect.h = screen_h;

//Video rectangle

SDL_Rect sdlRect2;

sdlRect2.x = 0;

sdlRect2.y = 0;

sdlRect2.w = pCodecCtx->width;

sdlRect2.h = pCodecCtx->height;

//Render to the SDL window

SDL_RenderClear(sdlRenderer);

SDL_UpdateYUVTexture(sdlTexture, &sdlRect2, dst_data[0], dst_linesize[0], dst_data[1], dst_linesize[1], dst_data[2], dst_linesize[2]);

SDL_RenderCopy(sdlRenderer, sdlTexture, NULL, &sdlRect);

SDL_RenderPresent(sdlRenderer);

SDL_Delay(1000 / framerate);

av_frame_unref(pFrame);

//Poll window events

SDL_Event sdl_event;

if (SDL_PollEvent(&sdl_event))

exitFlag = sdl_event.type == SDL_WINDOWEVENT && sdl_event.window.event == SDL_WINDOWEVENT_CLOSE;

}

}

av_packet_unref(&packet);

if (!gotPacket)

{

//For looped playback: after the buffered frames have been flushed, this call is required before decoding can start again.

avcodec_flush_buffers(pCodecCtx);

break;

}

}

if (!exitFlag)

{

if (isLoop)

{

//Seek back to the start

if (avformat_seek_file(pFormatCtx, -1, 0, 0, 0, AVSEEK_FLAG_FRAME) >= 0)

{

goto start;

}

}

}

end:

//Release resources

if (pFrame)

av_frame_free(&pFrame);

if (pCodecCtx)

{

avcodec_close(pCodecCtx);

avcodec_free_context(&pCodecCtx);

}

//avformat_close_input frees the context and sets the pointer to NULL

if (pFormatCtx)

avformat_close_input(&pFormatCtx);

if (swsContext)

sws_freeContext(swsContext);

av_dict_free(&opts);

if (outBuffer)

av_free(outBuffer);

if (sdlTexture)

SDL_DestroyTexture(sdlTexture);

if (sdlRenderer)

SDL_DestroyRenderer(sdlRenderer);

if (screen)

SDL_DestroyWindow(screen);

SDL_Quit();

return 0;

}

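Decoding audio with ffmpeg and playing it with SDL (push)

Preface

There are some examples online that decode audio with ffmpeg and play it through SDL, but most are incomplete: opening the audio device, resampling, and push-style playback all involve details that need care. Push-style playback in particular has a simple flow that fits in a single thread, yet the length of the queued data is hard to control. As with video, putting together a quick demo usually means rewriting a fair amount of code, so this article provides a more complete example that can be copied and used directly.

I. Decoding with ffmpeg

The ffmpeg decoding flow is a classic, general-purpose one; it is essentially the same whether the input is a file, a network stream, or a local device.

1. Open the input stream

First open the input stream. The input can be a file or an rtmp, rtsp, or http URL, among others.

AVFormatContext* pFormatCtx = NULL;
const char* input = "test.mp4";
//Open the input stream
avformat_open_input(&pFormatCtx, input, NULL, NULL);
//Read the stream information
avformat_find_stream_info(pFormatCtx, NULL);

2. Find the audio stream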

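Because we are playing audio, we need to locate the audio stream in the input. Iterate over the streams and pick the one whose codec_type is AVMEDIA_TYPE_AUDIO. An input may contain several audio streams; here we take the first one.

//Index of the audio stream
int audioindex = -1;
for (unsigned i = 0; i < pFormatCtx->nb_streams; i++)
    if (pFormatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_AUDIO) {
        audioindex = i;
        break;
    }

3. Open the decoder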

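Copy the decoder parameters from the stream information, look up the corresponding decoder, and then open it.

AVCodecContext* pCodecCtx = NULL;
const AVCodec* pCodec = NULL;
//Allocate the decoding context
pCodecCtx = avcodec_alloc_context3(NULL);
//Copy the decoder parameters
avcodec_parameters_to_context(pCodecCtx, pFormatCtx->streams[audioindex]->codecpar);
//Find the decoder
pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
//Open the decoder (opts is the option dictionary set up below)
avcodec_open2(pCodecCtx, pCodec, &opts);

When opening the decoder, a threading option can be passed to speed up decoding. The dictionary has to be filled in before the avcodec_open2 call above.

AVDictionary* opts = NULL;
//Use multithreaded decoding
if (!av_dict_get(opts, "threads", NULL, 0))
    av_dict_set(&opts, "threads", "auto", 0);

4. Decode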

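Decoding consists of reading packets from the input stream, sending each packet to the decoder, and receiving the decoded frames.

AVPacket packet;
AVFrame* pFrame = av_frame_alloc();
//Read a packet
while (av_read_frame(pFormatCtx, &packet) == 0)
{
    //Send the packet
    avcodec_send_packet(pCodecCtx, &packet);
    //Receive frames
    while (avcodec_receive_frame(pCodecCtx, pFrame) == 0)
    {
        //pFrame now holds a decoded frame
        av_frame_unref(pFrame);
    }
    av_packet_unref(&packet);
}

One detail needs attention: once av_read_frame reaches the end of the file, avcodec_send_packet must be called one more time with NULL or an empty packet to flush out the frames still buffered in the decoder. The complete decoding flow is: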

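while (1)
{
    int gotPacket = av_read_frame(pFormatCtx, &packet) == 0;
    //!gotPacket: no packet was read, so the decoder's buffered frames must be flushed and the decode step runs once more.
    //On failure av_read_frame leaves the packet blank (data == NULL, size == 0), which the decoder treats as a flush packet.
    if (!gotPacket || packet.stream_index == audioindex)
    {
        //Send the packet
        if (avcodec_send_packet(pCodecCtx, &packet) < 0)
        {
            printf("Decode error.\n");
            av_packet_unref(&packet);
            goto end;
        }
        //Receive the decoded frames
        while (avcodec_receive_frame(pCodecCtx, pFrame) == 0) {
            //pFrame now holds a decoded frame
            av_frame_unref(pFrame);
        }
    }
    av_packet_unref(&packet);
    if (!gotPacket)
        break;
}

5. Resampling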

When the sample format, sample rate, or channel count differs from what the output target expects, the audio has to be resampled. Resampling is normally done inside the decoding loop. In the code below, spec is the SDL_AudioSpec returned when the audio device is opened (see section II) and outBuffer is a uint8_t* initialized to NULL.

struct SwrContext* swr_ctx = NULL;
enum AVSampleFormat forceFormat = AV_SAMPLE_FMT_FLT;
uint8_t* data;
size_t dataSize;
if (forceFormat != pCodecCtx->sample_fmt || spec.freq != pFrame->sample_rate || spec.channels != pFrame->channels)
//Resample
{
    //Upper bound on the number of output samples
    int out_count = (int64_t)pFrame->nb_samples * spec.freq / pFrame->sample_rate + 256;
    //Size of the output data
    int out_size = av_samples_get_buffer_size(NULL, spec.channels, out_count, forceFormat, 0);
    //Input data pointers
    const uint8_t** in = (const uint8_t**)pFrame->extended_data;
    //Output buffer pointer (a single plane, which is enough for packed output formats)
    uint8_t** out = &outBuffer;
    int len2 = 0;
    if (out_size < 0) {
        av_log(NULL, AV_LOG_ERROR, "av_samples_get_buffer_size() failed\n");
        goto end;
    }
    if (!swr_ctx)
    //Initialize the resampler
    {
        swr_ctx = swr_alloc_set_opts(NULL, av_get_default_channel_layout(spec.channels), forceFormat, spec.freq, pCodecCtx->channel_layout, pCodecCtx->sample_fmt, pCodecCtx->sample_rate, 0, NULL);
        if (!swr_ctx || swr_init(swr_ctx) < 0) {
            av_log(NULL, AV_LOG_ERROR, "swr_alloc_set_opts() failed\n");
            goto end;
        }
    }
    if (!outBuffer)
    //Allocate the output buffer (sized from the first frame; this assumes later frames are no larger)
    {
        outBuffer = (uint8_t*)av_mallocz(out_size);
    }
    //Run the resampler
    len2 = swr_convert(swr_ctx, out, out_count, in, pFrame->nb_samples);
    if (len2 < 0) {
        av_log(NULL, AV_LOG_ERROR, "swr_convert() failed\n");
        goto end;
    }
    //Output data
    data = outBuffer;
    //Length of the output data in bytes
    dataSize = av_samples_get_buffer_size(0, spec.channels, len2, forceFormat, 1);
}

6. Release resources

Release the resources once they are no longer needed.

//Release resources
if (pFrame)
{
    if (pFrame->format != -1)
    {
        av_frame_unref(pFrame);
    }
    av_frame_free(&pFrame);
}
if (packet.data)
{
    av_packet_unref(&packet);
}
if (pCodecCtx)
{
    avcodec_close(pCodecCtx);
    avcodec_free_context(&pCodecCtx);
}
//avformat_close_input frees the context and sets the pointer to NULL, so no separate avformat_free_context call is needed
if (pFormatCtx)
    avformat_close_input(&pFormatCtx);
swr_free(&swr_ctx);
av_dict_free(&opts);
if (outBuffer)
    av_free(outBuffer);

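II. Playback with SDL

1. Initialize SDL

SDL must be initialized before it is used. This only needs to happen once for the whole program.

if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {
    printf("Could not initialize SDL - %s\n", SDL_GetError());
    return -1;
}

2. Open the audio device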

Opening the device with SDL_OpenAudioDevice is recommended. With SDL_OpenAudio the samples setting may not take effect, which works against push-style playback.
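The device-opening code that follows requests a buffer of `FFMAX(512, 2 << av_log2(freq / 30))` samples. As a rough illustration of what that expression evaluates to, the sketch below reimplements it in plain C. The names `ilog2` and `audio_buffer_samples` are hypothetical stand-ins for av_log2 and the expression itself, and the device is free to grant a different size in the returned spec.

```c
/* Integer floor(log2(v)); returns 0 for v <= 1. Stand-in for av_log2. */
static int ilog2(unsigned v)
{
    int n = 0;
    while (v >>= 1)
        n++;
    return n;
}

/* Buffer size in samples: the power of two just above 1/30 s of audio,
 * with a floor of 512 samples. */
static int audio_buffer_samples(int sample_rate)
{
    int n = 2 << ilog2((unsigned)(sample_rate / 30));
    return n < 512 ? 512 : n;
}
```

For 44100 Hz and 48000 Hz input this requests 2048 samples, roughly 43 to 46 ms of audio per device buffer; low sample rates bottom out at 512.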

SDL_AudioSpec wanted_spec, spec;
int audioId = 0;
//Open the device
//Use the decoder's channel count; channel_layout can be 0 for some inputs
wanted_spec.channels = pCodecCtx->channels;
wanted_spec.freq = pCodecCtx->sample_rate;
wanted_spec.format = AUDIO_F32SYS;
wanted_spec.silence = 0;
wanted_spec.samples = FFMAX(512, 2 << av_log2(wanted_spec.freq / 30));
//No callback: audio is pushed with SDL_QueueAudio
wanted_spec.callback = NULL;
wanted_spec.userdata = NULL;
//The last argument (1, SDL_AUDIO_ALLOW_FREQUENCY_CHANGE) lets the device pick a different sample rate, returned in spec
audioId = SDL_OpenAudioDevice(NULL, 0, &wanted_spec, &spec, 1);
if (audioId < 2)
{
    printf("Open audio device error!\n");
    goto end;
}
//Start playback
SDL_PauseAudioDevice(audioId, 0);

3. Playback (push)

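We play audio in push mode: calling SDL_QueueAudio writes the audio data into a queue that SDL maintains internally, and SDL reads from that queue at a regular rate and writes the data to the audio device.

SDL_QueueAudio(audioId, data, dataSize);

4. Release resources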

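Destroy the resources once they are no longer needed, as shown below. SDL_Quit is not strictly required: it is normally called only when the program exits, at which point it makes little difference whether it is called.

if (audioId >= 2)
    SDL_CloseAudioDevice(audioId);
SDL_Quit();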

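III. Controlling the queue length

Push-style playback (SDL_QueueAudio) raises a common question: how much data should be written to the queue, and how often? The goal is a stable queue length that never runs so low that the sound stutters. Writing a fixed amount at a fixed rate does not work in practice; it almost always ends in either stuttering from too little data or a queue that keeps growing. The queue length has to be controlled dynamically: write faster when data is short and slower when there is too much.

1. The problem

Writing either too fast or too slow causes problems.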

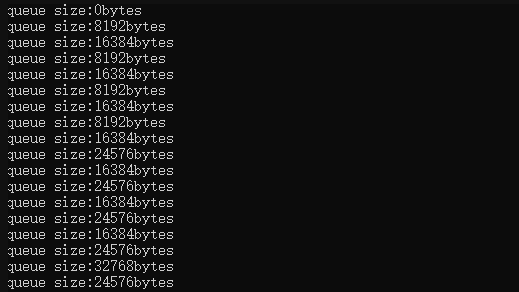

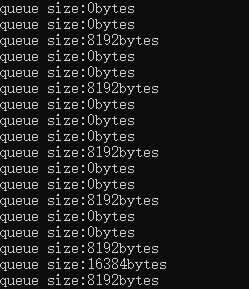

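(1) Writing too fast

When data is written too fast, the queue grows without bound, and a long enough playback session ends in out of memory.

(2) Writing too slowly

When data is written too slowly, the queue runs dry and SDL automatically fills in silence, so the sound comes out choppy.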
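To reason about either case it helps to express the queue length as playback time rather than bytes. The sketch below is a minimal conversion under the assumption of interleaved PCM; `queued_ms` is a hypothetical helper, and in a real player its first argument would come from SDL_GetQueuedAudioSize.

```c
/* Milliseconds of audio represented by `bytes` of interleaved PCM.
 * Returns 0 when the format parameters are not positive. */
static double queued_ms(unsigned bytes, int sample_rate, int channels,
                        int bytes_per_sample)
{
    double bytes_per_second =
        (double)sample_rate * channels * bytes_per_sample;
    if (bytes_per_second <= 0.0)
        return 0.0;
    return bytes * 1000.0 / bytes_per_second;
}
```

With 48000 Hz stereo float audio, 49152 queued bytes correspond to 128 ms of sound. If that number keeps climbing the writer is too fast; if it approaches zero an underrun is close.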

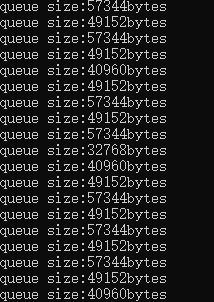

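2. Solution

(1) Use PID

PID is one of the simpler dynamic control algorithms. The delay adjustment is computed from the current queue length, which is enough to keep the queue length under control (example):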

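//Target queue length
double targetSize;
//Current queue length
int size;
//error_p, error_i, error_d, and error_dp are the proportional, integral, derivative, and previous errors; kp, ki, and kd are the tuning gains
error_p = targetSize - size;
error_i += error_p;
error_d = error_p - error_dp;
error_dp = error_p;
//PID output
size = (kp * error_p + ki * error_i + kd * error_d);
//Converting targetSize - size to a duration gives the delay.
double delay;

Result preview: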

The target queue length is 49152 bytes, and the actual length fluctuates within a controllable range around it.
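The PID idea can be sketched as a small self-contained controller. This is one possible formulation and not necessarily the one used to produce the result above: it works in milliseconds rather than bytes, shortens the delay when the queue is below target, lengthens it when the queue is above target, and clamps the result. The `QueuePid` struct, the `pid_next_delay_ms` function, and the clamp range are all assumptions made for illustration, and the gains still have to be tuned by experiment.

```c
/* State of a PID loop that regulates the audio queue length. */
typedef struct {
    double kp, ki, kd;  /* gains; concrete values are tuning assumptions */
    double integral;    /* accumulated error */
    double prev_error;  /* error seen on the previous call */
} QueuePid;

/* Returns how long to wait (ms) before writing the next chunk.
 * target_ms: desired queue length, queue_ms: current queue length,
 * chunk_ms: playback time of the chunk that was just written. */
static double pid_next_delay_ms(QueuePid *pid, double target_ms,
                                double queue_ms, double chunk_ms)
{
    double error = target_ms - queue_ms;   /* > 0: queue is too short */
    double derivative = error - pid->prev_error;
    double correction, delay;

    pid->integral += error;
    pid->prev_error = error;
    correction = pid->kp * error + pid->ki * pid->integral
               + pid->kd * derivative;

    /* A short queue means write sooner; a long queue means wait longer. */
    delay = chunk_ms - correction;
    if (delay < 0.0)
        delay = 0.0;
    if (delay > 2.0 * chunk_ms)
        delay = 2.0 * chunk_ms;
    return delay;
}
```

With only a proportional gain of 0.5, a queue that is 28 ms short of a 128 ms target shortens a 42 ms delay to 28 ms, while a queue that sits exactly on target leaves the delay at the chunk duration.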

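IV. Complete code

Combining the snippets above gives a complete audio decoding and playback flow (without PID).

SDK versions used by the example: ffmpeg 4.3, sdl2

Runs on both Windows and Linux.

Code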

#include <stdio.h>

#include <string.h>

#include <SDL.h>

#include "libavformat/avformat.h"

#include "libavcodec/avcodec.h"

#include "libswscale/swscale.h"

#include "libswresample/swresample.h"

#undef main

int main(int argc, char** argv) {

const char* input = "test_music.wav";

enum AVSampleFormat forceFormat;

AVFormatContext* pFormatCtx = NULL;

AVCodecContext* pCodecCtx = NULL;

const AVCodec* pCodec = NULL;

AVDictionary* opts = NULL;

AVPacket packet;

AVFrame* pFrame = NULL;

struct SwrContext* swr_ctx = NULL;

uint8_t* outBuffer = NULL;

int audioindex = -1;

int exitFlag = 0;

int isLoop = 1;

SDL_AudioSpec wanted_spec, spec;

int audioId = 0;

memset(&packet, 0, sizeof(AVPacket));

//Initialize SDL

if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {

printf("Could not initialize SDL - %s\n", SDL_GetError());

return -1;

}

//Open the input stream

if (avformat_open_input(&pFormatCtx, input, NULL, NULL) != 0) {

printf("Couldn't open input stream.\n");

goto end;

}

//Read the stream information

if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {

printf("Couldn't find stream information.\n");

goto end;

}

//Find the audio stream

for (unsigned i = 0; i < pFormatCtx->nb_streams; i++)

if (pFormatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_AUDIO) {

audioindex = i;

break;

}

if (audioindex == -1) {

printf("Didn't find a audio stream.\n");

goto end;

}

//Allocate the decoding context

pCodecCtx = avcodec_alloc_context3(NULL);

if (pCodecCtx == NULL)

{

printf("Could not allocate AVCodecContext\n");

goto end;

}

//Copy the decoder parameters and find the decoder

if (avcodec_parameters_to_context(pCodecCtx, pFormatCtx->streams[audioindex]->codecpar) < 0)

{

printf("Could not init AVCodecContext\n");

goto end;

}

pCodec = avcodec_find_decoder(pCodecCtx->codec_id);

if (pCodec == NULL) {

printf("Codec not found.\n");

goto end;

}

//Use multithreaded decoding

if (!av_dict_get(opts, "threads", NULL, 0))

av_dict_set(&opts, "threads", "auto", 0);

//Open the decoder

if (avcodec_open2(pCodecCtx, pCodec, &opts) < 0) {

printf("Could not open codec.\n");

goto end;

}

if (pCodecCtx->sample_fmt == AV_SAMPLE_FMT_NONE)

{

printf("Unknown sample format.\n");

goto end;

}

if (pCodecCtx->sample_rate <= 0 || pFormatCtx->streams[audioindex]->codecpar->channels <= 0)

{

printf("Invalid sample rate or channel count!\n");

goto end;

}

//Open the device

wanted_spec.channels = pFormatCtx->streams[audioindex]->codecpar->channels;

wanted_spec.freq = pCodecCtx->sample_rate;

wanted_spec.format = AUDIO_F32SYS;

wanted_spec.silence = 0;

wanted_spec.samples = FFMAX(512, 2 << av_log2(wanted_spec.freq / 30));

wanted_spec.callback = NULL;

wanted_spec.userdata = NULL;

audioId = SDL_OpenAudioDevice(NULL, 0, &wanted_spec, &spec, 1);

if (audioId < 2)

{

printf("Open audio device error!\n");

goto end;

}

switch (spec.format)

{

case AUDIO_S16SYS:

forceFormat = AV_SAMPLE_FMT_S16;

break;

case AUDIO_S32SYS:

forceFormat = AV_SAMPLE_FMT_S32;

break;

case AUDIO_F32SYS:

forceFormat = AV_SAMPLE_FMT_FLT;

break;

default:

printf("audio device format is not supported!\n");

goto end;

break;

}

pFrame = av_frame_alloc();

SDL_PauseAudioDevice(audioId, 0);

start:

while (!exitFlag)

{

//Read a packet

int gotPacket = av_read_frame(pFormatCtx, &packet) == 0;

if (!gotPacket || packet.stream_index == audioindex)

//!gotPacket: no packet was read, so the decoder's buffered frames must be flushed and the decode step runs once more.

{

//Send the packet

if (avcodec_send_packet(pCodecCtx, &packet) < 0)

{

printf("Decode error.\n");

av_packet_unref(&packet);

goto end;

}

//Receive the decoded frames

while (avcodec_receive_frame(pCodecCtx, pFrame) == 0) {

uint8_t* data;

size_t dataSize;

if (forceFormat != pCodecCtx->sample_fmt || spec.freq != pFrame->sample_rate || spec.channels != pFrame->channels)

//Resample

{

//Upper bound on the number of output samples

int out_count = (int64_t)pFrame->nb_samples * spec.freq / pFrame->sample_rate + 256;

//Size of the output data

int out_size = av_samples_get_buffer_size(NULL, spec.channels, out_count, forceFormat, 0);

//Input data pointers

const uint8_t** in = (const uint8_t**)pFrame->extended_data;

//Output buffer pointer

uint8_t** out = &outBuffer;

int len2 = 0;

if (out_size < 0) {

av_log(NULL, AV_LOG_ERROR, "av_samples_get_buffer_size() failed\n");

goto end;

}

if (!swr_ctx)

//Initialize the resampler

{

swr_ctx = swr_alloc_set_opts(NULL, av_get_default_channel_layout(spec.channels), forceFormat, spec.freq, av_get_default_channel_layout(pFormatCtx->streams[audioindex]->codecpar->channels), pCodecCtx->sample_fmt, pCodecCtx->sample_rate, 0, NULL);

if (!swr_ctx || swr_init(swr_ctx) < 0) {

av_log(NULL, AV_LOG_ERROR, "swr_alloc_set_opts() failed\n");

goto end;

}

}

if (!outBuffer)

//Allocate the output buffer (sized from the first frame; this assumes later frames are no larger)

{

outBuffer = (uint8_t*)av_mallocz(out_size);

}

//Run the resampler

len2 = swr_convert(swr_ctx, out, out_count, in, pFrame->nb_samples);

if (len2 < 0) {

av_log(NULL, AV_LOG_ERROR, "swr_convert() failed\n");

goto end;

}

//Output data

data = outBuffer;

//Length of the output data in bytes

dataSize = av_samples_get_buffer_size(0, spec.channels, len2, forceFormat, 1);

}

else

{

data = pFrame->data[0];

//Exact byte count of the frame's samples (align = 1, and the frame's linesize is left untouched)

dataSize = av_samples_get_buffer_size(NULL, pFrame->channels, pFrame->nb_samples, forceFormat, 1);

}

//Queue the data

SDL_QueueAudio(audioId, data, dataSize);

//Delay for the playback time of the data; the -1 keeps writes from falling behind and causing stutter

SDL_Delay((dataSize) * 1000.0 / (spec.freq * av_get_bytes_per_sample(forceFormat) * spec.channels) - 1);

int size = SDL_GetQueuedAudioSize(audioId);

printf("queue size:%dbytes\n", size);

}

}

av_packet_unref(&packet);

if (!gotPacket)

{

//For looped playback: after the buffered frames have been flushed, this call is required before decoding can start again.

avcodec_flush_buffers(pCodecCtx);

break;

}

}

if (!exitFlag)

{

if (isLoop)

{

//Seek back to the start

if (avformat_seek_file(pFormatCtx, -1, 0, 0, 0, AVSEEK_FLAG_FRAME) >= 0)

{

goto start;

}

}

}

end:

//Release resources

if (pFrame)

{

if (pFrame->format != -1)

{

av_frame_unref(pFrame);

}

av_frame_free(&pFrame);

}

if (packet.data)

{

av_packet_unref(&packet);

}

if (pCodecCtx)

{

avcodec_close(pCodecCtx);

avcodec_free_context(&pCodecCtx);

}

//avformat_close_input frees the context and sets the pointer to NULL

if (pFormatCtx)

avformat_close_input(&pFormatCtx);

swr_free(&swr_ctx);

av_dict_free(&opts);

if (outBuffer)

av_free(outBuffer);

if (audioId >= 2)

SDL_CloseAudioDevice(audioId);

SDL_Quit();

return 0;

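}

Summary

That covers today's topic. Overall, the flow for decoding audio with ffmpeg and playing it through SDL is essentially the same as for video. Compared with pull mode, push mode needs no extra queue and no condition variable for access control. Controlling the length of the queued audio data remains the hard part, though: PID achieves the goal in this article, but the length still fluctuates, so further parameter tuning or ... is needed.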

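Decoding audio with ffmpeg and playing it with SDL (pull)

Preface

The previous chapter covered playing ffmpeg-decoded audio through SDL in push mode, where the call flow is simple but the implementation is somewhat tricky. This chapter covers pull mode, which means callback-based playback: a callback is passed in when the SDL audio device is opened, SDL invokes it at a regular rate, and writing data into the audio buffer inside the callback is all it takes to produce sound.

I. Decoding with ffmpeg

The ffmpeg decoding part is the same as in "Decoding audio with ffmpeg and playing it with SDL (push)" and is not repeated here.

II. Playback with SDL

1. Initialize SDL

SDL must be initialized before it is used. This only needs to happen once for the whole program.

if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {
    printf("Could not initialize SDL - %s\n", SDL_GetError());
    return -1;
}

2. Open the audio device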

Opening the device with SDL_OpenAudioDevice is recommended; with SDL_OpenAudio the samples setting may not take effect, which makes it less flexible. Pull-style playback is simply callback-based playback, so all that is needed is to assign wanted_spec.callback in the code below.

SDL_AudioSpec wanted_spec, spec;
int audioId = 0;
//Open the device
//Use the decoder's channel count; channel_layout can be 0 for some inputs
wanted_spec.channels = pCodecCtx->channels;
wanted_spec.freq = pCodecCtx->sample_rate;
wanted_spec.format = AUDIO_F32SYS;
wanted_spec.silence = 0;
wanted_spec.samples = FFMAX(512, 2 << av_log2(wanted_spec.freq / 30));
//Register the callback
wanted_spec.callback = audioCallback;
wanted_spec.userdata = NULL;
audioId = SDL_OpenAudioDevice(NULL, 0, &wanted_spec, &spec, 1);
if (audioId < 2)
{
    printf("Open audio device error!\n");
    goto end;
}
//Start playback
SDL_PauseAudioDevice(audioId, 0);

3. Playback (pull)

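We play audio in pull mode: a callback is registered, SDL triggers it at a regular rate, and we only have to write audio data into the buffer pointer passed to the callback.

//Callback through which the audio device pulls data
void audioCallback(void* userdata, Uint8* stream, int len) {
    //TODO: read data from the audio queue into the device buffer
}

4. Release resources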

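Destroy the resources once they are no longer needed, as shown below. SDL_Quit is not strictly required: it is normally called only when the program exits, at which point it makes little difference whether it is called.

if (audioId >= 2)
{
    SDL_PauseAudioDevice(audioId, 1);
    SDL_CloseAudioDevice(audioId);
}
SDL_Quit();

III. Using AVAudioFifo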

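Because playback is callback-based, a queue is needed: the decoder writes decoded data into it and the audio device callback reads the data back out. The audio queue that ffmpeg provides can be used directly.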
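One detail of the reading side is worth isolating: the callback has to fill the whole device buffer even when the queue holds less than `len` bytes, because SDL2 does not pre-fill the buffer. The sketch below shows that copy-then-pad step on plain byte arrays. `fill_device_buffer` is a hypothetical helper, not an SDL or ffmpeg function, and `silence` stands for the silence value reported in the device's SDL_AudioSpec.

```c
#include <string.h>

/* Copy up to `len` bytes of queued audio into the device buffer and pad
 * whatever is missing with the silence value.
 * Returns the number of bytes taken from the queue. */
static int fill_device_buffer(unsigned char *stream, int len,
                              const unsigned char *queued, int queued_len,
                              unsigned char silence)
{
    int n = queued_len < len ? queued_len : len;
    if (n < 0)
        n = 0;
    if (n > 0)
        memcpy(stream, queued, (size_t)n);
    if (n < len)
        memset(stream + n, silence, (size_t)(len - n));
    return n;
}
```

In a real callback the queued bytes would come from the FIFO, read under the same lock that the decoding thread uses when writing.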

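1. Initialization

AVAudioFifo works in units of samples, so initialization requires the sample format, the channel count, and the queue length in samples. Use the audio device's parameters directly and make the queue somewhat longer than one device buffer.

//Use an audio queue
AVAudioFifo *fifo = av_audio_fifo_alloc(forceFormat, spec.channels, spec.samples * 10);

2. Writing data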

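Data is written into the queue at the point where frames are decoded.

//Decoded audio data
uint8_t* data;
//Number of samples in the audio data
size_t samples;
if (av_audio_fifo_space(fifo) >= samples)
{
    av_audio_fifo_write(fifo, (void**)&data, samples);
}

3. Reading data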

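The queued audio data is read inside the audio device callback.

void audioCallback(void* userdata, Uint8* stream, int len) {
    //SDL2 does not pre-fill the buffer, so silence it first in case the queue holds fewer samples than requested
    memset(stream, spec.silence, len);
    //Read data from the audio queue into the device buffer
    av_audio_fifo_read(fifo, (void**)&stream, spec.samples);
}

4. Release resources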

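if (fifo)
{
    av_audio_fifo_free(fifo);
}

IV. Complete code

Combining the snippets above gives a complete audio decoding and playback flow.

SDK versions used by the example: ffmpeg 4.3, sdl2

Runs on both Windows and Linux.

Code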

#include <stdio.h>

#include <string.h>

#include <SDL.h>

#include "libavformat/avformat.h"

#include "libavcodec/avcodec.h"

#include "libswscale/swscale.h"

#include "libswresample/swresample.h"

#include "libavutil/audio_fifo.h"

#undef main

AVAudioFifo* fifo = NULL;

SDL_AudioSpec wanted_spec, spec;

SDL_mutex* mtx = NULL;

static void audioCallback(void* userdata, Uint8* stream, int len) {

//AVAudioFifo is not thread-safe and this callback runs on a separate thread, so a lock is required

SDL_LockMutex(mtx);

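//SDL2 does not pre-fill the buffer, so silence it first in case the queue runs short

memset(stream, spec.silence, len);

//Read the queued audio data

av_audio_fifo_read(fifo, (void**)&stream, spec.samples);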

SDL_UnlockMutex(mtx);

};

int main(int argc, char** argv) {

const char* input = "test_music.wav";

enum AVSampleFormat forceFormat;

AVFormatContext* pFormatCtx = NULL;

AVCodecContext* pCodecCtx = NULL;

const AVCodec* pCodec = NULL;

AVDictionary* opts = NULL;

AVPacket packet;

AVFrame* pFrame = NULL;

struct SwrContext* swr_ctx = NULL;

uint8_t* outBuffer = NULL;

int audioindex = -1;

int exitFlag = 0;

int isLoop = 1;

double framerate;

int audioId = 0;

memset(&packet, 0, sizeof(AVPacket));

//Initialize SDL

if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {

printf("Could not initialize SDL - %s\n", SDL_GetError());

return -1;

}

//Open the input stream

if (avformat_open_input(&pFormatCtx, input, NULL, NULL) != 0) {

printf("Couldn't open input stream.\n");

goto end;

}

//Read the stream information

if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {

printf("Couldn't find stream information.\n");

goto end;

}

//Find the audio stream

for (unsigned i = 0; i < pFormatCtx->nb_streams; i++)

if (pFormatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_AUDIO) {

audioindex = i;

break;

}

if (audioindex == -1) {

printf("Didn't find a audio stream.\n");

goto end;

}

//Allocate the decoding context

pCodecCtx = avcodec_alloc_context3(NULL);

if (pCodecCtx == NULL)

{

printf("Could not allocate AVCodecContext\n");

goto end;

}

//Copy the decoder parameters and find the decoder

if (avcodec_parameters_to_context(pCodecCtx, pFormatCtx->streams[audioindex]->codecpar) < 0)

{

printf("Could not init AVCodecContext\n");

goto end;

}

pCodec = avcodec_find_decoder(pCodecCtx->codec_id);

if (pCodec == NULL) {

printf("Codec not found.\n");

goto end;

}

//Use multithreaded decoding

if (!av_dict_get(opts, "threads", NULL, 0))

av_dict_set(&opts, "threads", "auto", 0);

//Open the decoder

if (avcodec_open2(pCodecCtx, pCodec, &opts) < 0) {

printf("Could not open codec.\n");

goto end;

}

if (pCodecCtx->sample_fmt == AV_SAMPLE_FMT_NONE)

{

printf("Unknown sample format.\n");

goto end;

}

if (pCodecCtx->sample_rate <= 0 || pFormatCtx->streams[audioindex]->codecpar->channels <= 0)

{

printf("Invalid sample rate or channel count!\n");

goto end;

}

//Open the device

wanted_spec.channels = pFormatCtx->streams[audioindex]->codecpar->channels;

wanted_spec.freq = pCodecCtx->sample_rate;

wanted_spec.format = AUDIO_F32SYS;

wanted_spec.silence = 0;

wanted_spec.samples = FFMAX(512, 2 << av_log2(wanted_spec.freq / 30));

wanted_spec.callback = audioCallback;

wanted_spec.userdata = NULL;

audioId = SDL_OpenAudioDevice(NULL, 0, &wanted_spec, &spec, 1);

if (audioId < 2)

{

printf("Open audio device error!\n");

goto end;

}

switch (spec.format)

{

case AUDIO_S16SYS:

forceFormat = AV_SAMPLE_FMT_S16;

break;

case AUDIO_S32SYS:

forceFormat = AV_SAMPLE_FMT_S32;

break;

case AUDIO_F32SYS:

forceFormat = AV_SAMPLE_FMT_FLT;

break;

default:

printf("audio device format is not supported!\n");

goto end;

break;

}

pFrame = av_frame_alloc();

if (!pFrame)

{

printf("alloc frame failed!\n");

goto end;

}

//Use an audio queue

fifo = av_audio_fifo_alloc(forceFormat, spec.channels, spec.samples * 10);

if (!fifo)

{

printf("alloc fifo failed!\n");

goto end;

}

mtx = SDL_CreateMutex();

if (!mtx)

{

printf("alloc mutex failed!\n");

goto end;

}

SDL_PauseAudioDevice(audioId, 0);

start:

while (!exitFlag)

{

//Read a packet

int gotPacket = av_read_frame(pFormatCtx, &packet) == 0;

if (!gotPacket || packet.stream_index == audioindex)

//!gotPacket: no packet was read, so the decoder's buffered frames must be flushed and the decode step runs once more.

{

//Send the packet

if (avcodec_send_packet(pCodecCtx, &packet) < 0)

{

printf("Decode error.\n");

av_packet_unref(&packet);

goto end;

}

//Receive the decoded frames

while (avcodec_receive_frame(pCodecCtx, pFrame) == 0) {

uint8_t* data;

size_t dataSize;

size_t samples;

if (forceFormat != pCodecCtx->sample_fmt || spec.freq != pFrame->sample_rate || spec.channels != pFrame->channels)

//Resample

{

//Upper bound on the number of output samples

int out_count = (int64_t)pFrame->nb_samples * spec.freq / pFrame->sample_rate + 256;

//Size of the output data

int out_size = av_samples_get_buffer_size(NULL, spec.channels, out_count, forceFormat, 0);

//Input data pointers

const uint8_t** in = (const uint8_t**)pFrame->extended_data;

//Output buffer pointer

uint8_t** out = &outBuffer;

int len2 = 0;

if (out_size < 0) {

av_log(NULL, AV_LOG_ERROR, "av_samples_get_buffer_size() failed\n");

goto end;

}

if (!swr_ctx)

//Initialize the resampler

{

swr_ctx = swr_alloc_set_opts(NULL, av_get_default_channel_layout(spec.channels), forceFormat, spec.freq, av_get_default_channel_layout(pFormatCtx->streams[audioindex]->codecpar->channels) , pCodecCtx->sample_fmt, pCodecCtx->sample_rate, 0, NULL);

if (!swr_ctx || swr_init(swr_ctx) < 0) {

av_log(NULL, AV_LOG_ERROR, "swr_alloc_set_opts() failed\n");

goto end;

}

}

if (!outBuffer)

//Allocate the output buffer (sized from the first frame; this assumes later frames are no larger)

{

outBuffer = (uint8_t*)av_mallocz(out_size);

}

//Run the resampler

len2 = swr_convert(swr_ctx, out, out_count, in, pFrame->nb_samples);

if (len2 < 0) {

av_log(NULL, AV_LOG_ERROR, "swr_convert() failed\n");

goto end;

}

//Output data

data = outBuffer;

//Length of the output data in bytes

dataSize = av_samples_get_buffer_size(0, spec.channels, len2, forceFormat, 1);

samples = len2;

}

else

{

data = pFrame->data[0];

//Exact byte count of the frame's samples (align = 1, and the frame's linesize is left untouched)

dataSize = av_samples_get_buffer_size(NULL, pFrame->channels, pFrame->nb_samples, forceFormat, 1);

samples = pFrame->nb_samples;

}

//Write to the queue

while (1) {

SDL_LockMutex(mtx);

if (av_audio_fifo_space(fifo) >= samples)

{

av_audio_fifo_write(fifo, (void**)&data, samples);

SDL_UnlockMutex(mtx);

break;

}

SDL_UnlockMutex(mtx);

//Not enough free space in the queue: wait and retry

SDL_Delay((dataSize) * 1000.0 / (spec.freq * av_get_bytes_per_sample(forceFormat) * spec.channels) - 1);

}

}

}

av_packet_unref(&packet);

if (!gotPacket)

{

//For looped playback: after the buffered frames have been flushed, this call is required before decoding can start again.

avcodec_flush_buffers(pCodecCtx);

break;

}

}

if (!exitFlag)

{

if (isLoop)

{

//Seek back to the start

if (avformat_seek_file(pFormatCtx, -1, 0, 0, 0, AVSEEK_FLAG_FRAME) >= 0)

{

goto start;

}

}

}

end:

//Release resources

//Close the audio device first so the callback can no longer touch the fifo

if (audioId >= 2)

{

SDL_PauseAudioDevice(audioId, 1);

SDL_CloseAudioDevice(audioId);

}

if (fifo)

{

av_audio_fifo_free(fifo);

}

if (mtx)

{

SDL_DestroyMutex(mtx);

}

if (pFrame)

{

if (pFrame->format != -1)

{

av_frame_unref(pFrame);

}

av_frame_free(&pFrame);

}

if (packet.data)

{

av_packet_unref(&packet);

}

if (pCodecCtx)

{

avcodec_close(pCodecCtx);

avcodec_free_context(&pCodecCtx);

}

if (pFormatCtx)

avformat_close_input(&pFormatCtx);

if (pFormatCtx)

avformat_free_context(pFormatCtx);

swr_free(&swr_ctx);

av_dict_free(&opts);

if (outBuffer)

av_free(outBuffer);

SDL_Quit();

return 0;

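}

Summary

That is all for this part. Playing audio in pull mode is much simpler than in push mode, and it is more flexible: it can be extended to more complex features such as mixing several audio tracks, which is hard to do with push. The one thing to watch is thread safety. Reading and writing the queue is simple, and its length can be controlled with a plain delay.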

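Clock synchronization for video playback with ffmpeg and sdl

Preface

Once video playback with ffmpeg and sdl works, clock synchronization is still needed before the video plays correctly. This matters most when there is audio: the video is usually synchronized to the audio so that picture and sound stay aligned. Video clock synchronization can be hard to grasp, and knowing the theory does not guarantee a working implementation; the parameters and the concrete logic have to be found through practice. This article describes several concrete ways to implement it.

I. Direct delay

Video can be paced with a plain delay. This is not very accurate, but it serves as an entry-level method.

1. Delay by frame rate

After rendering each frame, sleep for a fixed time computed from the frame rate.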
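As a small illustration of that calculation, the sketch below derives the per-frame delay from a frame rate. `Rational` is a hypothetical stand-in for FFmpeg's `AVRational` so that the snippet compiles without FFmpeg, and the `fallback` parameter is an assumption for streams that report no frame rate.

```c
#include <assert.h>

/* Hypothetical stand-in for AVRational: a frame rate of num/den frames per second. */
typedef struct {
    int num;
    int den;
} Rational;

/* Seconds per frame, i.e. the reciprocal of the frame rate.
   Returns `fallback` when the rate is unknown (num or den is not positive). */
static double frame_duration_seconds(Rational rate, double fallback)
{
    assert(fallback > 0.0);
    if (rate.num <= 0 || rate.den <= 0)
        return fallback;
    return (double)rate.den / rate.num;
}
```

For 25 fps this gives 0.04 s per frame; for an NTSC rate of 30000/1001 it gives roughly 0.0334 s. The result is what would be multiplied by 1000000 before being handed to a microsecond sleep.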

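//Get the video frame rate
AVRational framerate = play->formatContext->streams[video->decoder.streamIndex]->avg_frame_rate;
//Delay for one frame in seconds: the reciprocal of the frame rate (den / num)
double duration = (double)framerate.den / framerate.num;
//Display the frame
//(omitted)
//Delay
av_usleep(duration * 1000000);

2. Delay by duration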

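After rendering each frame, sleep for that frame's duration, which the container format usually provides.

//Duration of the current frame. This approach may fail to obtain a duration. Other ways exist and are not covered here: ffplay, for example, buffers several frames to compute the duration, or you can estimate it from several frames yourself.
AVRational timebase = play->formatContext->streams[video->decoder.streamIndex]->time_base;
double duration = frame->pkt_duration * (double)timebase.num / timebase.den;
//Display the frame
//(omitted)
//Delay
av_usleep(duration * 1000000);

II. Synchronizing to a clock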

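Note: this part covers video clock synchronization only; audio is not involved.

The simple delays above can satisfy playback needs to a degree, but they cannot guarantee accurate timing. Demuxing and decoding also take time, so a fixed delay alone makes the lag accumulate. A clock is needed to correct the presentation time of every frame.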
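The accumulation is easy to quantify. The sketch below is a back-of-the-envelope model, not code from the player: it assumes every frame costs a constant `workPerFrame` seconds of demuxing, decoding and rendering on top of the fixed sleep. Both function names are hypothetical.

```c
#include <assert.h>

/* Wall-clock time needed to show `frames` frames when each frame sleeps a
   fixed `frameDuration` and additionally spends `workPerFrame` seconds on work. */
static double fixed_delay_total(int frames, double frameDuration, double workPerFrame)
{
    assert(frames >= 0 && frameDuration > 0.0 && workPerFrame >= 0.0);
    return frames * (frameDuration + workPerFrame);
}

/* Lag behind the ideal schedule (frames * frameDuration) after `frames` frames. */
static double fixed_delay_drift(int frames, double frameDuration, double workPerFrame)
{
    return fixed_delay_total(frames, frameDuration, workPerFrame) - frames * frameDuration;
}
```

With 25 fps video (0.04 s per frame) and 5 ms of work per frame, one minute of video (1500 frames) ends about 7.5 s late, which is why the following sections measure against a clock instead of sleeping a fixed amount.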

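1. Synchronizing to an absolute clock

A simple approach is to synchronize to the clock in absolute terms: however long the video is, once started it finishes after that much system time. If the video falls behind, frames are dropped until it catches up.

Define the video start time outside the playback loop.

//Video start time, in seconds
double videoStartTime=0;

Synchronize inside the playback loop. In the code below, frame is the decoded AVFrame.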

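//All times below are in seconds
//Current time
double currentTime = av_gettime_relative() / 1000000.0 - videoStartTime;
//Timestamp of the video frame
double pts = frame->pts * (double)timebase.num / timebase.den;
//Time difference: greater than 0 means late, less than 0 means early.
double diff = currentTime - pts;
//Duration of the video frame. This approach may fail to obtain a duration. Other ways exist and are not covered here: ffplay, for example, buffers several frames to compute the duration.
double duration = frame->pkt_duration * (double)timebase.num / timebase.den;
//Above the threshold: when the clock and the frame differ by more than 0.1s, reset the start time.
if (diff > 0.1)
{
    videoStartTime = av_gettime_relative() / 1000000.0 - pts;
    currentTime = pts;
    diff = 0;
}
//Early: delay
if (diff < 0)
{
    //Clamp the delay so that an overly long sleep cannot freeze the program
    if (diff < -0.1)
    {
        diff = -0.1;
    }
    av_usleep(-diff * 1000000);
    currentTime = av_gettime_relative() / 1000000.0 - videoStartTime;
    diff = currentTime - pts;
}
//Late: drop the frame. duration is the length of one frame; being within one duration is on time, and one more duration is added as the threshold for dropping.
if (diff > 2 * duration)
{
    av_frame_unref(frame);
    av_frame_free(&frame);
    //Returning here skips rendering, i.e. drops the frame. Rendering anyway to catch up is also possible.
    return;
}
//Display the frame
//(omitted)

2. Synchronizing to the video clock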

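Synchronizing to the video clock means using the pts of the displayed video as the reference: every time a frame is rendered, the video clock is updated from that frame's pts. The only difference from the code above is one extra clock-update line at the bottom.

//Update the video clock
videoStartTime = av_gettime_relative() / 1000000.0 - pts;

The rest is essentially the same as in the previous section, so it is not explained again; refer to the comments there.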

//All times below are in seconds
//Current time
double currentTime = av_gettime_relative() / 1000000.0 - videoStartTime;
//Timestamp of the video frame
double pts = frame->pts * (double)timebase.num / timebase.den;
//Time difference: greater than 0 means late, less than 0 means early.
double diff = currentTime - pts;
//Duration of the video frame (see the note in the previous section on when it may be unavailable).
double duration = frame->pkt_duration * (double)timebase.num / timebase.den;
//Above the threshold: when the clock and the frame differ by more than 0.1s, reset the start time.
if (diff > 0.1)
{
    videoStartTime = av_gettime_relative() / 1000000.0 - pts;
    currentTime = pts;
    diff = 0;
}
//Early: delay
if (diff < 0)
{
    //Clamp the delay so that an overly long sleep cannot freeze the program
    if (diff < -0.1)
    {
        diff = -0.1;
    }
    av_usleep(-diff * 1000000);
    currentTime = av_gettime_relative() / 1000000.0 - videoStartTime;
    diff = currentTime - pts;
}
//Late: drop the frame, using two frame durations as the threshold.
if (diff > 2 * duration)
{
    av_frame_unref(frame);
    av_frame_free(&frame);
    //Returning here skips rendering, i.e. drops the frame. Rendering anyway to catch up is also possible.
    return;
}
//Update the video clock
videoStartTime = av_gettime_relative() / 1000000.0 - pts;
//Display the frame
//(omitted)

III. Synchronizing to audio

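1. Computing the audio clock

To synchronize to audio, the audio clock has to be computed first. The pts can be derived from the amount of audio data played.

Define two variables: the audio pts, and the start time of the audio clock.

//Both variables are in seconds
double audioPts=0;
double audioStartTime=0;

Compute the audio clock in the sdl audio playback callback. Here spec is the SDL_AudioSpec passed as the fourth parameter of SDL_OpenAudioDevice.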

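//Audio device playback callback
static void play_audio_callback(void* userdata, uint8_t* stream, int len) {
    //Length in bytes of the audio data written to the device
    int dataSize = 0;
    //Copy the data to stream and set dataSize
    //(omitted)
    //Compute the audio clock
    if (dataSize > 0)
    {
        //Advance the current pts by the duration of the data just written
        audioPts += (double)dataSize / (spec.freq * av_get_bytes_per_sample(forceFormat) * spec.channels);
        //Update the audio clock
        audioStartTime = av_gettime_relative() / 1000000.0 - audioPts;
    }
}

2. Synchronizing to the audio clock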

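With an audio clock available, the video has to be synchronized to the audio. It is enough to add the synchronization logic on top of II.2.

//Synchronize to audio
double avDiff = 0;
avDiff = videoStartTime - audioStartTime;
diff += avDiff;

Complete code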

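//All times below are in seconds
//Current time
double currentTime = av_gettime_relative() / 1000000.0 - videoStartTime;
//Timestamp of the video frame
double pts = frame->pts * (double)timebase.num / timebase.den;
//Time difference: greater than 0 means late, less than 0 means early.
double diff = currentTime - pts;
//Duration of the video frame (see the note in II.1 on when it may be unavailable).
double duration = frame->pkt_duration * (double)timebase.num / timebase.den;
//Synchronize to audio
double avDiff = 0;
avDiff = videoStartTime - audioStartTime;
diff += avDiff;
//Above the threshold: when the clock and the frame differ by more than 0.1s, reset the start time.
if (diff > 0.1)
{
    videoStartTime = av_gettime_relative() / 1000000.0 - pts;
    currentTime = pts;
    diff = 0;
}
//Early: delay
if (diff < 0)
{
    //Clamp the delay so that an overly long sleep cannot freeze the program
    if (diff < -0.1)
    {
        diff = -0.1;
    }
    av_usleep(-diff * 1000000);
    currentTime = av_gettime_relative() / 1000000.0 - videoStartTime;
    diff = currentTime - pts;
}
//Late: drop the frame, using two frame durations as the threshold.
if (diff > 2 * duration)
{
    av_frame_unref(frame);
    av_frame_free(&frame);
    //Returning here skips rendering, i.e. drops the frame. Rendering anyway to catch up is also possible.
    return;
}
//Update the video clock
videoStartTime = av_gettime_relative() / 1000000.0 - pts;
//Display the frame
//(omitted)

Summary

That is all for this part. This article briefly introduced several ways to synchronize the video clock. They are not especially difficult, but little material on them can be found online, and ffplay, the obvious reference, is somewhat complex. Parts of the implementation here borrow from ffplay. The synchronization can still be improved, for example with PID-style dynamic control, and the duration calculation can be refined.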

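Wrapping audio/video clock synchronization into a general-purpose module in C

Preface

A video player needs audio/video clock synchronization, and this is not easy to implement. The theory is usually known, but without real debugging it is hard to write synchronization that works. There is code to refer to: ffplay implements 3 kinds of synchronization, but its logic is fairly complex and hard to extract for direct reuse. The author implemented clock synchronization in his own player with ffplay as a reference; see "Clock synchronization for video playback with ffmpeg and sdl". During that work it became clear that this feature can be turned into a general-purpose module usable in any audio/video playback scenario.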

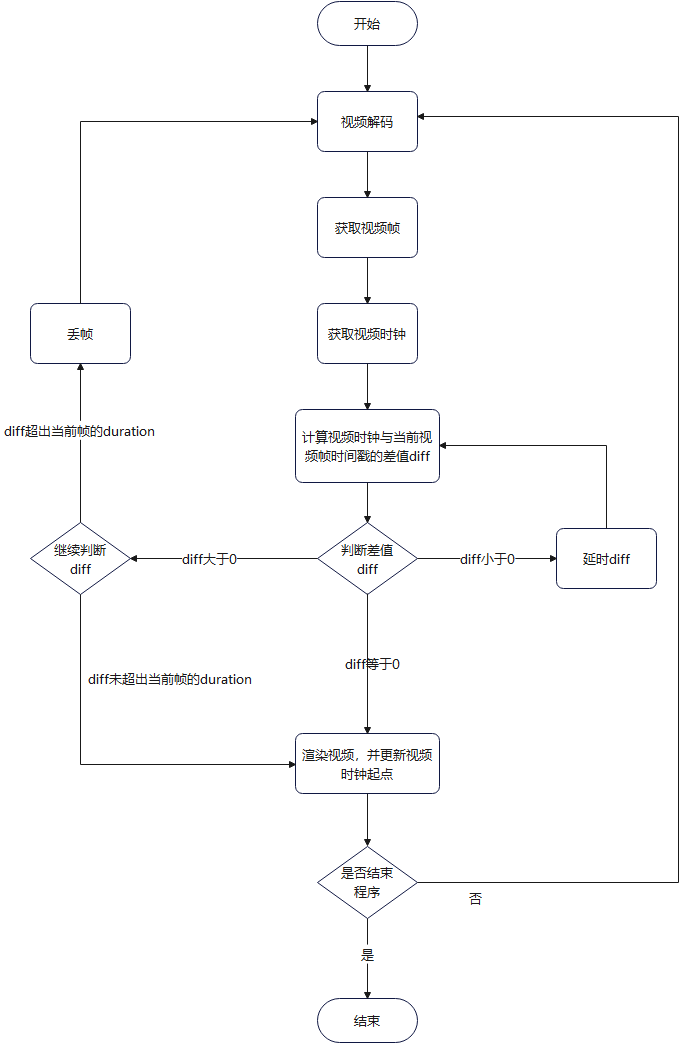

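I. Video clock

1. How the clock is computed

The video clock is based on the timestamps of the video frames. Because video is rendered at a certain frame rate, reading the current frame timestamp directly as the clock introduces error and lacks precision. To get an accurate, continuous time, use a system clock: record the start of the clock whenever the video updates, and use the system clock offset by that start as the video clock. The procedure is:

Each time a video frame is rendered, update the video clock start:

video clock start = system clock − video timestamp

At any moment, read the video clock:

video clock = system clock − video clock start

Code example:

Define the variable

//Video clock start, in seconds
double videoStartTime=0;

Update the video clock start

//Update (or, more precisely, correct) the video clock start. pts is the timestamp of the current video frame and getCurrentTime returns the current system clock time, both in seconds
videoStartTime=getCurrentTime()-pts;

Read the video clock

//Read the video clock, in seconds
double videoTime=getCurrentTime()-videoStartTime;

2. Synchronizing the video clock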

有了上述时钟的计算方法,我们可以获得一个准确的视频时钟。为了确保视频能够在正确的时间渲染我们还需要进行视频渲染时的时钟同步。

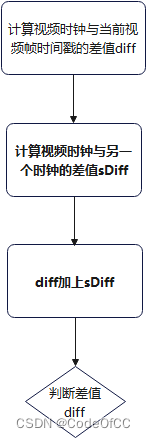

同步流程如下所示,流程图中的“更新视频时钟起点”和“获取视频时钟”与一节的计算方法直接对应。

核心代码示例

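/// <summary>
/// Synchronizes the video clock.
/// Call this before displaying a video frame, passing the frame's pts and duration; the return value decides whether to display, drop, or delay.
/// </summary>
/// <param name="pts">Video frame pts, in seconds</param>
/// <param name="duration">Video frame duration, in seconds</param>
/// <returns>Greater than 0: delay by that many seconds. Equal to 0: display. Less than 0: drop the frame</returns>
double synVideo(double pts, double duration)
{
    if (videoStartTime == 0)
    //Initialize the video clock start
    {
        videoStartTime = getCurrentTime() - pts;
    }
    //All times below are in seconds
    //Read the video clock
    double currentTime = getCurrentTime() - videoStartTime;
    //Time difference: greater than 0 means late, less than 0 means early.
    double diff = currentTime - pts;
    //Early: delay
    if (diff < -0.001)
    {
        if (diff < -0.1)
        {
            diff = -0.1;
        }
        return -diff;
    }
    //Late: drop the frame. Being within one duration is on time; one more duration is added as the threshold for dropping.
    if (diff > 2 * duration)
    {
        return -1;
    }
    //Update the video clock start
    videoStartTime = getCurrentTime() - pts;
    return 0;
}

3. Synchronizing to another clock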

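The video clock is now synchronized on its own, but sometimes the video has to follow another clock, such as the audio clock or an external clock. This is simple: after computing the video clock offset diff, add the difference between the video clock and the other clock.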
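Why adding that difference works can be checked with a little algebra: (now − videoStart − pts) + (videoStart − otherStart) = (now − otherStart) − pts, which is the frame's lateness measured directly against the other clock. The sketch below spells this out; the function names are hypothetical and time is passed in explicitly so that no system clock is needed.

```c
#include <assert.h>

/* Lateness of a frame against the video clock: > 0 late, < 0 early. */
static double video_diff(double now, double videoStart, double pts)
{
    return (now - videoStart) - pts;
}

/* The article's approach: video diff plus the offset between the two clock starts. */
static double synced_diff(double now, double videoStart, double otherStart, double pts)
{
    return video_diff(now, videoStart, pts) + (videoStart - otherStart);
}

/* Lateness measured directly against the other clock, for comparison. */
static double direct_diff(double now, double otherStart, double pts)
{
    return (now - otherStart) - pts;
}
```

For example, with now = 100.0, videoStart = 90.0, otherStart = 90.5 and pts = 9.75, the frame looks 0.25 s late on the video clock but is 0.25 s early on the other clock, and both `synced_diff` and `direct_diff` report −0.25.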

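On top of the flowchart from the previous section, add the steps shown in bold.

Add the following to the code from the previous section:

//Time difference: greater than 0 means late, less than 0 means early.
double diff = currentTime - pts;
//Synchronize to another clock-start
double sDiff = 0;
//anotherStartTime is the start time of the other clock
sDiff = videoStartTime - anotherStartTime;
diff += sDiff;
//Synchronize to another clock-end
//Early: delay
if (diff < -0.001)

II. Audio clock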

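1. How the clock is computed

The audio clock is computed a little differently from the video clock, although the structure is similar: for audio, time is derived from the amount of data.

(1) Duration formulas

Formula 1

duration = audio data length (bytes) / (channels ∗ sample rate ∗ bit depth / 8)

Code:

//Number of channels
int channels=2;
//Sample rate
int samplerate=48000;
//Bit depth
int bitsPerSample=32;
//Data length in bytes
int dataSize=8192;
//Duration, in seconds
double duration=(double)dataSize/(channels*samplerate*bitsPerSample/8);
//duration is 8192 / 384000 = 0.021333...

Formula 2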

duration = number of samples / sample rate

Code:

//Number of samples
int samples=1024;
//Sample rate
int samplerate=48000;
//Duration, in seconds
double duration=(double)samples/samplerate;
//duration is 0.021333333...

(2) Calculation method

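Computing the audio clock starts with tracking the audio timestamp. It is obtained by converting every chunk of audio data played into a duration and accumulating the results (n is the number of chunks played so far):

$$\text{audio timestamp} = \sum_{i=0}^{n} \frac{\text{audio data length (bytes)}_i}{\text{channels} \times \text{sample rate} \times \text{bit depth} / 8}$$

or

$$\text{audio timestamp} = \sum_{i=0}^{n} \frac{\text{number of samples}_i}{\text{sample rate}}$$

With the audio timestamp, the start of the audio clock can be computed:

audio clock start = system clock − audio timestamp

and from the start, the audio clock itself:

audio clock = system clock − audio clock start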

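Code example:

Define the variables

//Audio clock start, in seconds
double audioStartTime=0;
//Audio timestamp, in seconds
double currentPts=0;

Update the audio clock (from the sample count)

//Advance the timestamp; samples is the number of samples being played
currentPts += (double)samples / samplerate;
//Compute the audio clock start
audioStartTime= getCurrentTime() - currentPts;

Update the audio clock (from the data length)

currentPts += (double)bytesSize / (channels *samplerate *bitsPerSample/8);
//Compute the audio clock start
audioStartTime= getCurrentTime() - currentPts;

Read the audio clock

//Read the audio clock, in seconds
double audioTime= getCurrentTime() - audioStartTime;

2. Synchronizing the audio clock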

有了时间戳的计算方法,接下来就需要确定同步的时机,以确保计算的时钟是准确的。通常按照音频设备播放音频的耗时去更新音频时钟就是准确的。

我们根据上述计算过方法封装一个更新音频时钟的方法:

/// <summary>

/// 更新音频时钟

/// </summary>

/// <param name="samples">采样数</param>

/// <param name="samplerate">采样率</param>

/// <returns>应该播放的采样数</returns>

int synAudio(int samples, int samplerate) {

currentPts += (double)samples / samplerate;

//getCurrentTime为获取当前系统时钟的时间

audioStartTime= getCurrentTime() - currentPts;

return samples;

}

/// <summary>

/// 更新音频时钟,通过数据长度

/// </summary>

/// <param name="bytesSize">数据长度</param>

/// <param name="samplerate">采样率</param>

/// <param name="channels">声道数</param>

/// <param name="bitsPerSample">位深</param>

/// <returns>应该播放的采样数</returns>

int synAudioByBytesSize(size_t bytesSize, int samplerate, int channels, int bitsPerSample) {

return synAudio((double)bytesSize / (channels * bitsPerSample/8), samplerate) * (bitsPerSample/8)* channels;

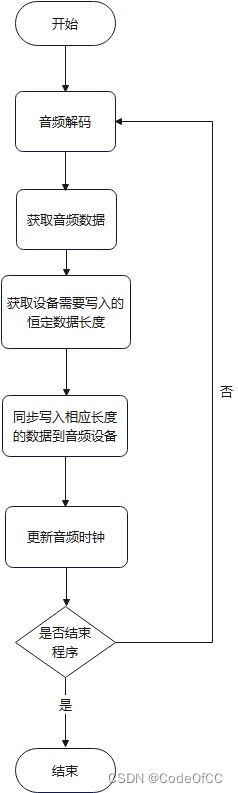

}(1)、阻塞式

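Audio can be played in blocking mode: the call that submits the audio data returns only after the device has finished playing it. The waiting time reflects how long the device really takes to play the data, so it is enough to update the audio clock after each write completes.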

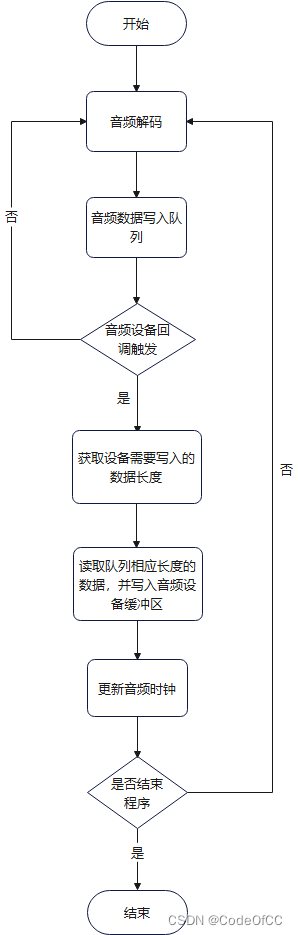
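The blocking flow can be sketched as follows. This is a simulation under stated assumptions rather than real device code: `FakeDevice` and `fake_blocking_write` are hypothetical and only advance a fake clock by the playing time of the samples, where a real blocking write would return after the hardware has consumed them.

```c
#include <assert.h>

/* Hypothetical device whose only state is a fake system clock, in seconds. */
typedef struct {
    double now;
} FakeDevice;

typedef struct {
    double startTime;   /* audio clock start, seconds */
    double currentPts;  /* accumulated audio timestamp, seconds */
} AudioClock;

/* Stand-in for a blocking write: "returns" once the samples have been played. */
static void fake_blocking_write(FakeDevice* dev, int samples, int samplerate)
{
    assert(samples >= 0 && samplerate > 0);
    dev->now += (double)samples / samplerate;
}

/* Write one chunk, then update the audio clock as described above. */
static void play_blocking(FakeDevice* dev, AudioClock* clock, int samples, int samplerate)
{
    fake_blocking_write(dev, samples, samplerate);
    clock->currentPts += (double)samples / samplerate;
    clock->startTime = dev->now - clock->currentPts;
}

/* Audio clock = system clock - audio clock start. */
static double audio_time(const FakeDevice* dev, const AudioClock* clock)
{
    return dev->now - clock->startTime;
}
```

Starting with the fake clock at 5.0 s and writing three chunks of 1024 samples at 48000 Hz leaves the audio clock at about 0.064 s and its start at about 5.0 s.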

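(2) Callback mode

Audio data can also be written through a callback; sdl, for example, offers this mode. The audio clock is updated inside the callback.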

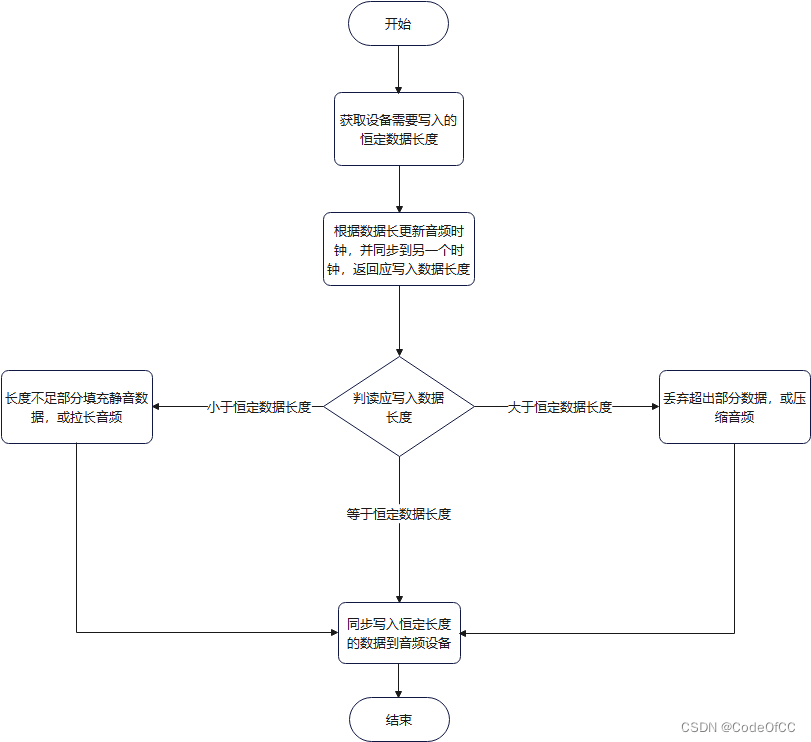

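3. Synchronizing to another clock

Audio normally does not need to follow another clock, because audio playback requires no clock correction by itself. Some scenarios still need it, such as multi-track playback.

The key flow is as follows:

Code example for computing how much data should be written (samples is the number of samples the audio device asks for):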

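//Current time of the audio clock
double audioTime = getCurrentTime() - audioStartTime;
double diff = 0;
//Difference to the other clock; getMasterTime returns the current time of the other clock
diff = getMasterTime(syn) - audioTime;
int oldSamples = samples;
if (fabs(diff) > 0.01)
//Beyond the offset threshold: add the number of samples that corresponds to the difference
{
    samples += diff * samplerate;
}
if (samples < 0)
//Lower bound: play nothing and wait
{
    samples = 0;
}
if (samples > oldSamples * 2)
//Upper bound: catch up at 2x speed at most
{
    samples = oldSamples * 2;
}
//The resulting samples is the amount of data to consume; the audio clock calculation that follows also uses this value.

III. External clock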

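Playback can also follow an external clock, with both video and audio synchronizing to it.

1. Absolute clock

During playback, external causes such as a stalling system, a slow network, or slow disk I/O occasionally delay the presentation. If a 1-minute video stalls for a few seconds along the way, it finishes after 1 minute and a few seconds. If the video must finish in exactly 1 minute, an absolute clock can be used as the external clock.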
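The difference can be seen in a deliberately simplified model, which is an illustration and not the module's code. It assumes a fake clock, a hiccup of fixed length before every `hiccupEvery`-th frame, and no frame dropping. With a video clock the start is re-based on every shown frame, so each late frame shifts the rest of the schedule; with an absolute clock the start never moves, so the player waits less afterwards and catches up. All names are hypothetical.

```c
#include <assert.h>

typedef enum { CLOCK_VIDEO, CLOCK_ABSOLUTE } ClockMode;

/* Returns the fake-clock time at which the last of `frames` frames is shown.
   Before every `hiccupEvery`-th frame the player loses `hiccup` seconds.
   Frame dropping is left out to keep the model small. */
static double simulate_finish_time(ClockMode mode, int frames, double duration,
                                   int hiccupEvery, double hiccup)
{
    assert(frames > 0 && duration > 0.0 && hiccupEvery > 0 && hiccup >= 0.0);
    double now = 0.0;    /* fake system clock */
    double start = 0.0;  /* clock start: fixed for CLOCK_ABSOLUTE */
    for (int i = 0; i < frames; i++) {
        double pts = i * duration;
        if (i > 0 && i % hiccupEvery == 0)
            now += hiccup;                  /* stall, slow disk, slow network */
        double diff = (now - start) - pts;  /* > 0 late, < 0 early */
        if (diff < 0)
            now -= diff;                    /* early: wait until the frame is due */
        if (mode == CLOCK_VIDEO)
            start = now - pts;              /* re-base the clock on the shown frame */
    }
    return now;
}
```

With 100 frames of 0.04 s and a 0.06 s hiccup before every 10th frame, the last frame is shown at about 4.14 s with the video clock (nine hiccups, each leaving 0.02 s of lag) and at about 3.96 s, the nominal time, with the absolute clock.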

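This article uses this kind of clock. The rough steps are:

video starts playing --> set the absolute clock start --> synchronize video to the absolute clock --> synchronize audio to the absolute clock

IV. Wrapping it into a general-purpose module

Complete code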

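/************************************************************************
* @Project: Synchronize
* @Description: Video clock synchronization module
* A general-purpose clock synchronization module that supports 3 modes: synchronizing to the video clock, to the audio clock, or to an external (absolute) clock.
* Usage is simple: after initialization, call the corresponding function where video is displayed and where audio is played.
* Not thread-safe: no locking is used internally. Plain synchronization generally does not need it; seeking may require some mutual exclusion.
* No special dependencies (the current version uses ffmpeg to read the system clock; in C++ this takes one line of code, otherwise macros are needed to select a per-platform implementation)
* @Version: v1.0
* @Author: Xin Nie
* @Create: 2022/9/03 14:55:00
* @LastUpdate: 2022/9/22 16:36:00
************************************************************************
* Copyright @ 2022. All rights reserved.
************************************************************************/
//Headers needed by the code below: fabs, printf, memset, av_gettime_relative
#include <math.h>
#include <stdio.h>
#include <string.h>
#include "libavutil/time.h"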

/// <summary>
/// Clock object
/// </summary>
typedef struct {
    //Start time
    double startTime;
    //Current pts
    double currentPts;
}Clock;
/// <summary>
/// Clock synchronization type
/// </summary>
typedef enum {
    //Synchronize to audio
    SYNCHRONIZETYPE_AUDIO,
    //Synchronize to video
    SYNCHRONIZETYPE_VIDEO,
    //Synchronize to the absolute clock
    SYNCHRONIZETYPE_ABSOLUTE
}SynchronizeType;
/// <summary>
/// Clock synchronization object
/// </summary>
typedef struct {
    /// <summary>
    /// Audio clock
    /// </summary>
    Clock audio;
    /// <summary>
    /// Video clock
    /// </summary>
    Clock video;
    /// <summary>
    /// Absolute clock
    /// </summary>
    Clock absolute;
    /// <summary>
    /// Clock synchronization type
    /// </summary>
    SynchronizeType type;
    /// <summary>
    /// Estimated video frame duration
    /// </summary>
    double estimateVideoDuration;
    /// <summary>
    /// Number of frames used for the estimate
    /// </summary>
    double n;
}Synchronize;
/// <summary>
/// Returns the current time
/// </summary>
/// <returns>Current time in seconds, with microsecond precision</returns>
static double getCurrentTime()
{
    //Uses ffmpeg's av_gettime_relative. Without ffmpeg, replace it with a platform clock: seconds as the unit, microsecond precision, relative or absolute both work.
    return av_gettime_relative() / 1000000.0;
}

/// <summary>
/// Resets clock synchronization.
/// Typically used for pause and seek.
/// </summary>
/// <param name="syn">Clock synchronization object</param>
void synchronize_reset(Synchronize* syn) {
    SynchronizeType type = syn->type;
    memset(syn, 0, sizeof(Synchronize));
    syn->type = type;
}
/// <summary>
/// Gets the master clock
/// </summary>
/// <param name="syn">Clock synchronization object</param>
/// <returns>Master clock object</returns>
Clock* synchronize_getMasterClock(Synchronize* syn) {
    switch (syn->type)
    {
    case SYNCHRONIZETYPE_AUDIO:
        return &syn->audio;
    case SYNCHRONIZETYPE_VIDEO:
        return &syn->video;
    case SYNCHRONIZETYPE_ABSOLUTE:
        return &syn->absolute;
    default:
        break;
    }
    return 0;
}
/// <summary>
/// Gets the time of the master clock
/// </summary>
/// <param name="syn">Clock synchronization object</param>
/// <returns>Time, in seconds</returns>
double synchronize_getMasterTime(Synchronize* syn) {
    return getCurrentTime() - synchronize_getMasterClock(syn)->startTime;
}
/// <summary>
/// Sets the time of a clock
/// </summary>
/// <param name="syn">Clock synchronization object</param>
/// <param name="clock">Clock object</param>
/// <param name="pts">Current time, in seconds</param>
void synchronize_setClockTime(Synchronize* syn, Clock* clock, double pts)
{
    clock->currentPts = pts;
    clock->startTime = getCurrentTime() - pts;
}
/// <summary>
/// Gets the time of a clock
/// </summary>
/// <param name="syn">Clock synchronization object</param>
/// <param name="clock">Clock object</param>
/// <returns>Time, in seconds</returns>
double synchronize_getClockTime(Synchronize* syn, Clock* clock)
{
    return getCurrentTime() - clock->startTime;
}

/// <summary>
/// Updates the video clock
/// </summary>
/// <param name="syn">Clock synchronization object</param>
/// <param name="pts">Video frame pts, in seconds</param>
/// <param name="duration">Video frame duration, in seconds. Pass 0 to have the duration estimated internally</param>
/// <returns>Greater than 0: delay by that many seconds. Equal to 0: display. Less than 0: drop the frame</returns>
double synchronize_updateVideo(Synchronize* syn, double pts, double duration)
{
    if (duration == 0)
    //Estimate the duration
    {
        if (pts != syn->video.currentPts)
            syn->estimateVideoDuration = (syn->estimateVideoDuration * syn->n + pts - syn->video.currentPts) / (double)(syn->n + 1);
        duration = syn->estimateVideoDuration;
        //Only the most recent few frames take part in the estimate
        if (syn->n++ > 3)
            syn->estimateVideoDuration = syn->n = 0;
        if (duration == 0)
            duration = 0.1;
    }
    if (syn->video.startTime == 0)
    {
        syn->video.startTime = getCurrentTime() - pts;
    }
    //All times below are in seconds
    //Current time
    double currentTime = getCurrentTime() - syn->video.startTime;
    //Time difference: greater than 0 means late, less than 0 means early.
    double diff = currentTime - pts;
    double sDiff = 0;
    if (syn->type != SYNCHRONIZETYPE_VIDEO && synchronize_getMasterClock(syn)->startTime != 0)
    //Synchronize to the master clock
    {
        sDiff = syn->video.startTime - synchronize_getMasterClock(syn)->startTime;
        diff += sDiff;
    }
    //Early: delay
    if (diff < -0.001)
    {
        if (diff < -0.1)
        {
            diff = -0.1;
        }
        return -diff;
    }
    syn->video.currentPts = pts;
    //Late: drop the frame. Being within one duration is on time; one more duration is added as the threshold for dropping.
    if (diff > 2 * duration)
    {
        return -1;
    }
    //Update the video clock
    printf("video-time:%.3lfs audio-time:%.3lfs absolute-time:%.3lfs synDiff:%.4lfms diff:%.4lfms \r", pts, getCurrentTime() - syn->audio.startTime, getCurrentTime() - syn->absolute.startTime, sDiff * 1000, diff * 1000);
    syn->video.startTime = getCurrentTime() - pts;
    if (syn->absolute.startTime == 0)
    //Initialize the absolute clock
    {
        syn->absolute.startTime = syn->video.startTime;
    }
    return 0;
}

/// <summary>
/// Updates the audio clock
/// </summary>
/// <param name="syn">Clock synchronization object</param>
/// <param name="samples">Number of samples</param>
/// <param name="samplerate">Sample rate</param>
/// <returns>Number of samples that should be played</returns>
int synchronize_updateAudio(Synchronize* syn, int samples, int samplerate) {
    if (syn->type != SYNCHRONIZETYPE_AUDIO && synchronize_getMasterClock(syn)->startTime != 0)
    {
        //Synchronize to the master clock
        double audioTime = getCurrentTime() - syn->audio.startTime;
        double diff = 0;
        diff = synchronize_getMasterTime(syn) - audioTime;
        int oldSamples = samples;
        if (fabs(diff) > 0.01) {
            samples += diff * samplerate;
        }
        if (samples < 0)
        {
            samples = 0;
        }
        if (samples > oldSamples * 2)
        {
            samples = oldSamples * 2;
        }
    }
    syn->audio.currentPts += (double)samples / samplerate;
    syn->audio.startTime = getCurrentTime() - syn->audio.currentPts;
    if (syn->absolute.startTime == 0)
    //Initialize the absolute clock
    {
        syn->absolute.startTime = syn->audio.startTime;
    }
    return samples;
}
/// <summary>
/// Updates the audio clock from a data length
/// </summary>
/// <param name="syn">Clock synchronization object</param>
/// <param name="bytesSize">Data length in bytes</param>
/// <param name="samplerate">Sample rate</param>
/// <param name="channels">Number of channels</param>
/// <param name="bitsPerSample">Bit depth</param>
/// <returns>Number of bytes that should be played</returns>
int synchronize_updateAudioByBytesSize(Synchronize* syn, size_t bytesSize, int samplerate, int channels, int bitsPerSample) {
    return synchronize_updateAudio(syn, bytesSize / (channels * bitsPerSample/8), samplerate) * (bitsPerSample /8)* channels;

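}

V. Usage examples

1. Basic usage

(1) Initialization

Synchronize syn;
memset(&syn,0,sizeof(Synchronize));

(2) Setting the synchronization type

Set the synchronization type. If it is not set, the default is synchronizing to audio.

//Synchronize to audio
syn.type=SYNCHRONIZETYPE_AUDIO;
//Synchronize to video
syn.type=SYNCHRONIZETYPE_VIDEO;
//Synchronize to the absolute clock
syn.type=SYNCHRONIZETYPE_ABSOLUTE;

(3) Video synchronization

Call this where the video is rendered. If there is only video and no audio, remember to set the synchronization type to SYNCHRONIZETYPE_VIDEO or SYNCHRONIZETYPE_ABSOLUTE.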

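//pts of the current frame, in seconds
double pts;
//Duration of the current frame, in seconds
double duration;
//Video synchronization
double delay =synchronize_updateVideo(&syn,pts,duration);
if (delay > 0)
//Delay
{
    //Wait for delay seconds
}
else if (delay < 0)
//Drop the frame
{
}
else
//Display
{
}

(4) Audio synchronization

Call this where the audio is played.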

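//Number of samples about to be written
int samples;
//Sample rate of the audio
int samplerate;
//Clock synchronization. The returned samples is the number of samples that should actually be consumed. The number of samples written to the device must not change, so the consumed samples have to be compressed or stretched to that size.
samples = synchronize_updateAudio(&syn, samples, samplerate);

(5) Getting the playback time

Get the current playback time.

//Returns the current clock, in seconds.
double cursorTime=synchronize_getMasterTime(&syn);

(6) Pause

After pausing, simply reset the clock. Note that the correct playback time cannot be read until playback resumes.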

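void pause(){
    //Pause logic
    ...
    //Reset the clock
    synchronize_reset(&syn);
}

(7) Seek

After seeking, simply reset the clock. In a multi-threaded setup, make sure the clock is not updated again by another thread right after the reset.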

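void seek(double pts){
    //Seek logic
    ...
    //Reset the clock
    synchronize_reset(&syn);
}

Where audio is decoded or about to be played, correct the audio timestamp after the seek.

//Timestamp of the first audio frame after the seek.
double pts;
synchronize_setClockTime(&syn, &syn.audio, pts);

Summary

That is all for this part. This article briefly introduced the principle of audio/video clock synchronization and a concrete implementation. Most of the principle follows ffplay, with some simplification. The method given here is only one option: video synchronization could, for instance, swap the clock directly, so that the video follows a given clock. The audio strategy, such as how the sample count is computed, can also be adjusted to the situation. Overall, this is a general-purpose synchronization module that covers common playback needs and can simplify the implementation considerably.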

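Appendix

1. Getting the system clock

The complete code reads the system clock through ffmpeg. For cases where ffmpeg is not available and the function has to be implemented by hand, here are some platform-specific ways to read the system clock.

(1) Windows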

#include<Windows.h>
/// <summary>
/// Returns the current time
/// </summary>
/// <returns>Current time in seconds, with microsecond precision</returns>
static double getCurrentTime()
{
    LARGE_INTEGER ticks, Frequency;
    QueryPerformanceFrequency(&Frequency);
    QueryPerformanceCounter(&ticks);
    return (double)ticks.QuadPart / (double)Frequency.QuadPart;
}

(2) C++11

#include<chrono>
/// <summary>
/// Returns the current time
/// </summary>
/// <returns>Current time in seconds, with microsecond precision</returns>
static double getCurrentTime()
{
    return std::chrono::time_point_cast <std::chrono::nanoseconds>(std::chrono::high_resolution_clock::now()).time_since_epoch().count() / 1e+9;
}
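The original list covers Windows and C++11 only. For POSIX systems such as Linux, a comparable sketch in plain C could use `clock_gettime` with `CLOCK_MONOTONIC`. The name `getCurrentTimePosix` is hypothetical, chosen so that it does not clash with the versions above.

```c
#define _POSIX_C_SOURCE 199309L
#include <assert.h>
#include <time.h>

/* Returns the current time in seconds from a monotonic clock.
   Resolution depends on the platform and is typically well below a microsecond. */
static double getCurrentTimePosix(void)
{
    struct timespec ts;
    int rc = clock_gettime(CLOCK_MONOTONIC, &ts);
    assert(rc == 0);
    (void)rc; /* silence the unused warning when assertions are disabled */
    return (double)ts.tv_sec + ts.tv_nsec / 1e9;
}
```

A monotonic clock is used here because the module only ever subtracts two readings, so what matters is that the value never jumps backwards, not that it matches wall-clock time.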

使用ffmpeg实现单线程异步的视频播放器

前言

ffplay是一个不错的播放器,是基于多线程实现的,播放视频时一般至少有4个线程:读包线程、视频解码线程、音频解码线程、视频渲染线程。如果需要多路播放时,线程不可避免的有点多,比如需要播放8路视频时则需要32个线程,这样对性能的消耗还是比较大的。于是想到用单线程实现一个播放器,经过实践发现是可行的,播放本地文件时可以做到完全单线程、播放网络流时需要一个线程实现读包异步。

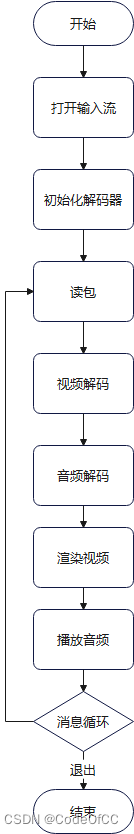

一、播放流程

二、关键实现

因为是基于单线程的播放器有些细节还是要注意的。

1.视频

(1)解码

解码时需要注意设置多线程解码或者硬解以确保解码速度,因为在单线程中解码过慢则会导致视频卡顿。

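//Use multi-threaded decoding
if (!av_dict_get(opts, "threads", NULL, 0))
    av_dict_set(&opts, "threads", "auto", 0);
//Open the decoder
if (avcodec_open2(decoder->codecContext, codec, &opts) < 0) {
    LOG_ERROR("Could not open codec");
    av_dict_free(&opts);
    return ERRORCODE_DECODER_OPENFAILED;
}

Or select a hardware decoder where appropriate

codec = avcodec_find_decoder_by_name("hevc_qsv");
//Open the decoder
if (avcodec_open2(decoder->codecContext, codec, &opts) < 0) {
    LOG_ERROR("Could not open codec");
    av_dict_free(&opts);
    return ERRORCODE_DECODER_OPENFAILED;
}

2. Audio

(1) Correcting the clock

Audio playback is stream-based and the clock can be computed from the amount of data played. With packet loss or seeking, however, accumulating by data volume makes the clock wrong, so the clock should be corrected when decoded data is put into the playback queue. For synchronize_setClockTime, see "Wrapping audio/video clock synchronization into a general-purpose module in C". After audio decoding: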

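//Read the decoded audio frame
av_fifo_generic_read(play->audio.decoder.fifoFrame, &frame, sizeof(AVFrame*), NULL);
//Synchronize (correct) the clock
AVRational timebase = play->formatContext->streams[audio->decoder.streamIndex]->time_base;
//Timestamp of the current frame
double pts = (double)frame->pts * timebase.num / timebase.den;
//Subtracting the duration of the data still waiting in the playback queue gives the current audio clock
pts -= (double)av_audio_fifo_size(play->audio.playFifo) / play->audio.spec.freq;
synchronize_setClockTime(&play->synchronize, &play->synchronize.audio, pts);
//Synchronize (correct) the clock--end
//Write to the playback queue
av_audio_fifo_write(play->audio.playFifo, (void**)&data, samples);

3. Clock synchronization

Clock synchronization is needed in 3 places. One is after audio decoding, i.e. 2.(1) above. The other two are where audio is played and where video is rendered.

(1) Audio playback

For synchronize_updateAudio, see "Wrapping audio/video clock synchronization into a general-purpose module in C".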

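//sdl audio callback
static void audio_callback(void* userdata, uint8_t* stream, int len) {
    Play* play = (Play*)userdata;
    //Number of samples the device asks for
    int samples = play->audio.spec.samples;
    //Clock synchronization: get the number of samples that should be consumed. When synchronizing to audio, this always equals the number requested.
    samples = synchronize_updateAudio(&play->synchronize, samples, play->audio.spec.freq);
    //(omitted)
}

(2) Video playback

Add the following code where the video is rendered. For synchronize_updateVideo, see "Wrapping audio/video clock synchronization into a general-purpose module in C".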

//---------------clock synchronization--------------
AVRational timebase = play->formatContext->streams[video->decoder.streamIndex]->time_base;
//pts of the video frame
double pts = frame->pts * (double)timebase.num / timebase.den;
//Duration of the video frame
double duration = frame->pkt_duration * (double)timebase.num / timebase.den;
double delay = synchronize_updateVideo(&play->synchronize, pts, duration);
if (delay > 0)
//Delay
{
    play->wakeupTime = getCurrentTime() + delay;
    return 0;
}
else if (delay < 0)
//Drop the frame
{
    av_fifo_generic_read(video->decoder.fifoFrame, &frame, sizeof(AVFrame*), NULL);
    av_frame_unref(frame);
    av_frame_free(&frame);
    return 0;
}
else
//Display
{
    av_fifo_generic_read(video->decoder.fifoFrame, &frame, sizeof(AVFrame*), NULL);
}
//---------------clock synchronization-------------- end

4. Asynchronous packet reading

For local files, single-threaded playback works without problems. For network streams, av_read_frame is not asynchronous, so poor network conditions cause long delays that disturb the rest of the player. The packet read therefore has to run on a worker thread. The async/await idea is used here to make it asynchronous.

(1) async

Run av_read_frame in the thread pool.

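//Reads a packet asynchronously; called on a worker thread
static int packet_readAsync(void* arg)
{
    Play* play = (Play*)arg;
    play->eofPacket = av_read_frame(play->formatContext, &play->packet);
    //Return to the playback thread to handle the packet
    play_beginInvoke(play, packet_readAwait, play);
    return 0;
}

(2) await

When the read finishes, the playback thread is notified through the message queue and the follow-up work runs on the playback thread.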

//Runs after the asynchronous packet read has finished
static int packet_readAwait(void* arg)
{
    Play* play = (Play*)arg;
    if (play->eofPacket == 0)
    {
        if (play->packet.stream_index == play->video.decoder.streamIndex)
        //Append to the video packet queue
        {
            AVPacket* packet = av_packet_clone(&play->packet);
            av_fifo_generic_write(play->video.decoder.fifoPacket, &packet, sizeof(AVPacket*), NULL);
        }
        else if (play->packet.stream_index == play->audio.decoder.streamIndex)
        //Append to the audio packet queue
        {
            AVPacket* packet = av_packet_clone(&play->packet);
            av_fifo_generic_write(play->audio.decoder.fifoPacket, &packet, sizeof(AVPacket*), NULL);
        }
        av_packet_unref(&play->packet);
    }
    else if (play->eofPacket == AVERROR_EOF)
    {
        play->eofPacket = 1;
        //Queue an empty packet to flush the frames buffered in the decoder
        AVPacket* packet = &play->packet;
        if (play->audio.decoder.fifoPacket)
            av_fifo_generic_write(play->audio.decoder.fifoPacket, &packet, sizeof(AVPacket*), NULL);
        if (play->video.decoder.fifoPacket)
            av_fifo_generic_write(play->video.decoder.fifoPacket, &packet, sizeof(AVPacket*), NULL);
    }
    else
    {
        LOG_ERROR("read packet error!\n");
        play->exitFlag = 1;
        play->isAsyncReading = 0;
        return ERRORCODE_PACKET_READFRAMEFAILED;
    }
    play->isAsyncReading = 0;
    return 0;
}

(3) Message handling

Call the following function on the playback thread to process events. Once the await function has been posted to the message queue, the message loop picks it up and runs it on the playback thread.
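`play_beginInvoke` is called in `packet_readAsync`, but its body is not shown in this part. The idea behind it can be sketched, under assumptions, as a small queue of function/argument pairs: the worker posts a call, and the playback thread drains the queue in its loop. The names `InvokeQueue`, `invoke_post` and `invoke_drain` are hypothetical, and the sketch leaves out the locking that a real cross-thread queue needs (the article's MessageQueue carries a mutex and a condition variable for that purpose).

```c
#include <assert.h>
#include <stddef.h>

typedef int (*InvokeFn)(void* arg);

typedef struct {
    InvokeFn fn;
    void* arg;
} InvokeMessage;

#define INVOKE_QUEUE_CAPACITY 16

/* Fixed-size ring buffer of pending calls. Not thread-safe in this sketch. */
typedef struct {
    InvokeMessage items[INVOKE_QUEUE_CAPACITY];
    size_t head;   /* index of the oldest message */
    size_t count;  /* number of queued messages */
} InvokeQueue;

/* Queues fn(arg) for later execution. Returns 0 on success, -1 if the queue is full. */
static int invoke_post(InvokeQueue* q, InvokeFn fn, void* arg)
{
    assert(q && fn);
    if (q->count == INVOKE_QUEUE_CAPACITY)
        return -1;
    size_t tail = (q->head + q->count) % INVOKE_QUEUE_CAPACITY;
    q->items[tail].fn = fn;
    q->items[tail].arg = arg;
    q->count++;
    return 0;
}

/* Runs every queued call on the calling thread. Returns the number of calls executed. */
static int invoke_drain(InvokeQueue* q)
{
    int executed = 0;
    while (q->count > 0) {
        InvokeMessage m = q->items[q->head];
        q->head = (q->head + 1) % INVOKE_QUEUE_CAPACITY;
        q->count--;
        m.fn(m.arg);
        executed++;
    }
    return executed;
}

/* Example continuation: increments the int that arg points to. */
static int add_one(void* arg)
{
    ++*(int*)arg;
    return 0;
}
```

Posting `add_one` twice changes nothing until `invoke_drain` is called; the drain then runs both calls in order on the caller's thread, which is the property the await step relies on.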

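//Event handling
static void play_eventHandler(Play* play) {
    PlayMessage msg;
    while (messageQueue_poll(&play->mq, &msg)) {
        switch (msg.type)
        {
        case PLAYMESSAGETYPE_INVOKE:
        {
            //The braces give the declaration its own scope; before C23, C does not allow a declaration directly after a case label
            SDL_ThreadFunction fn = (SDL_ThreadFunction)msg.param1;
            fn(msg.param2);
            break;
        }
        }
    }
}

III. Complete code

The complete code runs as both C and C++, using ffmpeg 4.3 and sdl2.

main.c/cpp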

#include <stdio.h>
#include <stdint.h>
#include <stdarg.h>
#include <string.h>
#include <time.h>
#include "SDL.h"

#ifdef __cplusplus

extern "C" {

#endif

#include "libavformat/avformat.h"

#include "libavcodec/avcodec.h"

#include "libswscale/swscale.h"

#include "libavutil/imgutils.h"

#include "libavutil/avutil.h"

#include "libavutil/time.h"

#include "libavutil/audio_fifo.h"

#include "libswresample/swresample.h"

#ifdef __cplusplus

}

#endif

/************************************************************************

* @Project: play

* @Decription: 视频播放器

* 这是一个播放器,基于单线程实现的播放器。如果是播放本地文件可以做到完全单线程,播放网络流则读取包的时候是异步的,当然

* 主流程依然是单线程。目前是读取包始终异步,未作判断本地文件同步读包处理。

* @Verision: v0.0.0

* @Author: Xin Nie

* @Create: 2022/12/12 21:21:00

* @LastUpdate: 2022/12/12 21:21:00

************************************************************************

* Copyright @ 2022. All rights reserved.

************************************************************************/

/// <summary>

/// 消息队列

/// </summary>

typedef struct {

//队列长度

int _capacity;

//消息对象大小

int _elementSize;

//队列

AVFifoBuffer* _queue;

//互斥变量

SDL_mutex* _mtx;

//条件变量

SDL_cond* _cv;

}MessageQueue;

/// <summary>

/// 对象池

/// </summary>

typedef struct {

//对象缓存

void* buffer;

//对象大小

int elementSize;

//对象个数

int arraySize;

//对象使用状态1使用,0未使用

int* _arrayUseState;

//互斥变量

SDL_mutex* _mtx;

//条件变量

SDL_cond* _cv;

}OjectPool;

/// <summary>

/// 线程池

/// </summary>

typedef struct {

//最大线程数

int maxThreadCount;

//线程信息对象池

OjectPool _pool;

}ThreadPool;

/// <summary>

/// 线程信息

/// </summary>

typedef struct {

//所属线程池

ThreadPool* _threadPool;

//线程句柄

SDL_Thread* _thread;

//消息队列

MessageQueue _queue;

//线程回调方法

SDL_ThreadFunction _fn;

//线程回调参数

void* _arg;

}ThreadInfo;

//解码器

typedef struct {

//解码上下文

AVCodecContext* codecContext;

//解码器

const AVCodec* codec;

//解码临时帧

AVFrame* frame;

//包队列

AVFifoBuffer* fifoPacket;

//帧队列

AVFifoBuffer* fifoFrame;

//流下标

int streamIndex;

//解码结束标记

int eofFrame;

}Decoder;

/// <summary>

/// 时钟对象

/// </summary>

typedef struct {

//起始时间

double startTime;

//当前pts

double currentPts;

}Clock;

/// <summary>

/// 时钟同步类型

/// </summary>

typedef enum {

//同步到音频

SYNCHRONIZETYPE_AUDIO,

//同步到视频

SYNCHRONIZETYPE_VIDEO,

//同步到绝对时钟

SYNCHRONIZETYPE_ABSOLUTE

}SynchronizeType;

/// <summary>

/// 时钟同步对象

/// </summary>

typedef struct {

/// <summary>

/// 音频时钟

/// </summary>

Clock audio;

/// <summary>

/// 视频时钟

/// </summary>

Clock video;

/// <summary>

/// 绝对时钟

/// </summary>

Clock absolute;

/// <summary>

/// 时钟同步类型

/// </summary>

SynchronizeType type;

/// <summary>

/// 估算的视频帧时长

/// </summary>

double estimateVideoDuration;

/// <summary>

/// 估算视频帧数

/// </summary>

double n;

}Synchronize;

//视频模块

typedef struct {

//解码器

Decoder decoder;

//输出格式

enum AVPixelFormat forcePixelFormat;

//重采样对象

struct SwsContext* swsContext;

//重采样缓存

uint8_t* swsBuffer;

//渲染器

SDL_Renderer* sdlRenderer;

//纹理

SDL_Texture* sdlTexture;

//窗口

SDL_Window* screen;

//窗口宽

int screen_w;

//窗口高

int screen_h;

//旋转角度

double angle;

//播放结束标记

int eofDisplay;

//播放开始标记

int sofDisplay;

}Video;

//音频模块

typedef struct {

//解码器

Decoder decoder;

//输出格式

enum AVSampleFormat forceSampleFormat;

//音频设备id

SDL_AudioDeviceID audioId;

//期望的音频设备参数

SDL_AudioSpec wantedSpec;

//实际的音频设备参数

SDL_AudioSpec spec;

//重采样对象

struct SwrContext* swrContext;

//重采样缓存

uint8_t* swrBuffer;

//播放队列

AVAudioFifo* playFifo;

//播放队列互斥锁

SDL_mutex* mutex;

//累积的待播放采样数

int accumulateSamples;

//音量

int volume;

//声音混合buffer

uint8_t* mixBuffer;

//播放结束标记

int eofPlay;

//播放开始标记

int sofPlay;

}Audio;

//播放器

typedef struct {

//视频url

char* url;

//解复用上下文

AVFormatContext* formatContext;

//包

AVPacket packet;

//是否正在读取包

int isAsyncReading;

//包读取结束标记

int eofPacket;

//视频模块

Video video;

//音频模块

Audio audio;

//时钟同步

Synchronize synchronize;

//延时结束时间

double wakeupTime;

//播放一帧

int step;

//是否暂停

int isPaused;

//是否循环

int isLoop;

//退出标记

int exitFlag;

//消息队列

MessageQueue mq;

}Play;

//播放消息类型

typedef enum {

//调用方法

PLAYMESSAGETYPE_INVOKE

}PlayMessageType;

//播放消息

typedef struct {

PlayMessageType type;

void* param1;

void* param2;

}PlayMessage;

//格式映射

static const struct TextureFormatEntry {

enum AVPixelFormat format;

int texture_fmt;

} sdl_texture_format_map[] = {

{ AV_PIX_FMT_RGB8, SDL_PIXELFORMAT_RGB332 },

{ AV_PIX_FMT_RGB444, SDL_PIXELFORMAT_RGB444 },

{ AV_PIX_FMT_RGB555, SDL_PIXELFORMAT_RGB555 },

{ AV_PIX_FMT_BGR555, SDL_PIXELFORMAT_BGR555 },

{ AV_PIX_FMT_RGB565, SDL_PIXELFORMAT_RGB565 },

{ AV_PIX_FMT_BGR565, SDL_PIXELFORMAT_BGR565 },

{ AV_PIX_FMT_RGB24, SDL_PIXELFORMAT_RGB24 },

{ AV_PIX_FMT_BGR24, SDL_PIXELFORMAT_BGR24 },

{ AV_PIX_FMT_0RGB32, SDL_PIXELFORMAT_RGB888 },

{ AV_PIX_FMT_0BGR32, SDL_PIXELFORMAT_BGR888 },

{ AV_PIX_FMT_NE(RGB0, 0BGR), SDL_PIXELFORMAT_RGBX8888 },

{ AV_PIX_FMT_NE(BGR0, 0RGB), SDL_PIXELFORMAT_BGRX8888 },

{ AV_PIX_FMT_RGB32, SDL_PIXELFORMAT_ARGB8888 },

{ AV_PIX_FMT_RGB32_1, SDL_PIXELFORMAT_RGBA8888 },

{ AV_PIX_FMT_BGR32, SDL_PIXELFORMAT_ABGR8888 },

{ AV_PIX_FMT_BGR32_1, SDL_PIXELFORMAT_BGRA8888 },

{ AV_PIX_FMT_YUV420P, SDL_PIXELFORMAT_IYUV },

{ AV_PIX_FMT_YUYV422, SDL_PIXELFORMAT_YUY2 },

{ AV_PIX_FMT_UYVY422, SDL_PIXELFORMAT_UYVY },

{ AV_PIX_FMT_NONE, SDL_PIXELFORMAT_UNKNOWN },

};

/// <summary>

/// 错误码

/// </summary>

typedef enum {

//无错误

ERRORCODE_NONE = 0,

//播放

ERRORCODE_PLAY_OPENINPUTSTREAMFAILED = -0xffff,//打开输入流失败

ERRORCODE_PLAY_VIDEOINITFAILED,//视频初始化失败

ERRORCODE_PLAY_AUDIOINITFAILED,//音频初始化失败

ERRORCODE_PLAY_LOOPERROR,//播放循环错误

ERRORCODE_PLAY_READPACKETERROR,//解包错误

ERRORCODE_PLAY_VIDEODECODEERROR,//视频解码错误

ERRORCODE_PLAY_AUDIODECODEERROR,//音频解码错误

ERRORCODE_PLAY_VIDEODISPLAYERROR,//视频播放错误

ERRORCODE_PLAY_AUDIOPLAYERROR,//音频播放错误

//解包

ERRORCODE_PACKET_CANNOTOPENINPUTSTREAM,//Cannot open the input stream

ERRORCODE_PACKET_CANNOTFINDSTREAMINFO,//查找不到流信息

ERRORCODE_PACKET_DIDNOTFINDDANYSTREAM,//找不到任何流

ERRORCODE_PACKET_READFRAMEFAILED,//读取包失败

//解码

ERRORCODE_DECODER_CANNOTALLOCATECONTEXT,//解码器上下文申请内存失败

ERRORCODE_DECODER_SETPARAMFAILED,//解码器上下文设置参数失败

ERRORCODE_DECODER_CANNOTFINDDECODER,//找不到解码器

ERRORCODE_DECODER_OPENFAILED,//打开解码器失败

ERRORCODE_DECODER_SENDPACKEDFAILED,//解码失败

ERRORCODE_DECODER_MISSINGASTREAMTODECODE,//缺少用于解码的流

//视频

ERRORCODE_VIDEO_DECODERINITFAILED,//Video decoder initialization failed

ERRORCODE_VIDEO_CANNOTGETSWSCONTEX,//无法获取ffmpeg swsContext

ERRORCODE_VIDEO_IMAGEFILLARRAYFAILED,//将图像数据映射到数组时失败:av_image_fill_arrays

ERRORCODE_VIDEO_CANNOTRESAMPLEAFRAME,//无法重采样视频帧

ERRORCODE_VIDEO_MISSINGSTREAM,//缺少视频流

//音频

ERRORCODE_AUDIO_DECODERINITFAILED,//音频解码器初始化失败

ERRORCODE_AUDIO_UNSUPORTDEVICESAMPLEFORMAT,//不支持音频设备采样格式

ERRORCODE_AUDIO_SAMPLESSIZEINVALID,//采样大小不合法

ERRORCODE_AUDIO_MISSINGSTREAM,//缺少音频流

ERRORCODE_AUDIO_SWRINITFAILED,//ffmpeg swr重采样对象初始化失败

ERRORCODE_AUDIO_CANNOTCONVERSAMPLE,//音频重采样失败

ERRORCODE_AUDIO_QUEUEISEMPTY,//队列数据为空

//帧

ERRORCODE_FRAME_ALLOCFAILED,//初始化帧失败

//队列

ERRORCODE_FIFO_ALLOCFAILED,//初始化队列失败

//sdl

ERRORCODE_SDL_INITFAILED,//sdl初始化失败

ERRORCODE_SDL_CANNOTCREATEMUTEX,//无法创建互斥锁

ERRORCODE_SDL_CANNOTOPENDEVICE, //无法打开音频设备

ERRORCODE_SDL_CREATEWINDOWFAILED,//创建窗口失败

ERRORCODE_SDL_CREATERENDERERFAILED,//创建渲染器失败

ERRORCODE_SDL_CREATETEXTUREFAILED,//创建纹理失败

//内存

ERRORCODE_MEMORY_ALLOCFAILED,//申请内存失败

ERRORCODE_MEMORY_LEAK,//内存泄漏

//参数

ERRORCODE_ARGUMENT_INVALID,//参数不合法

ERRORCODE_ARGUMENT_OUTOFRANGE,//超出范围

}ErrorCode;

/// <summary>

/// 日志等级

/// </summary>

typedef enum {

LOGLEVEL_NONE = 0,

LOGLEVEL_INFO = 1,

LOGLEVEL_DEBUG = 2,

LOGLEVEL_TRACE = 4,

LOGLEVEL_WARNNING = 8,

LOGLEVEL_ERROR = 16,

LOGLEVEL_ALL = LOGLEVEL_INFO | LOGLEVEL_DEBUG | LOGLEVEL_TRACE | LOGLEVEL_WARNNING | LOGLEVEL_ERROR

}

LogLevel;

//输出日志

#define LOGHELPERINTERNALLOG(message,level,...) aclog(__FILE__,__FUNCTION__,__LINE__,level,message,##__VA_ARGS__)

#define LOG_INFO(message,...) LOGHELPERINTERNALLOG(message,LOGLEVEL_INFO, ##__VA_ARGS__)

#define LOG_DEBUG(message,...) LOGHELPERINTERNALLOG(message,LOGLEVEL_DEBUG,##__VA_ARGS__)

#define LOG_TRACE(message,...) LOGHELPERINTERNALLOG(message,LOGLEVEL_TRACE,##__VA_ARGS__)

#define LOG_WARNNING(message,...) LOGHELPERINTERNALLOG(message,LOGLEVEL_WARNNING,##__VA_ARGS__)

#define LOG_ERROR(message,...) LOGHELPERINTERNALLOG(message,LOGLEVEL_ERROR,##__VA_ARGS__)

static int logLevelFilter = LOGLEVEL_ALL;

static ThreadPool* _pool = NULL;

//Write a log entry

void aclog(const char* fileName, const char* methodName, int line, LogLevel level, const char* message, ...) {

if ((logLevelFilter & level) == 0)

return;

char dateTime[32];

time_t tt = time(0);

struct tm* t;

va_list valist;

char buf[512];

char* pBuf = buf;

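va_start(valist, message);

int size = vsnprintf(pBuf, sizeof(buf), message, valist);

va_end(valist);

if (size < 0)

buf[0] = '\0';

//vsnprintf returns the length the full string needs (without the terminator), so size >= sizeof(buf) means the output was truncated

if (size >= (int)sizeof(buf))

{

char* heapBuf = (char*)av_malloc(size + 1);

//if the allocation fails, fall back to the truncated text already in buf

if (heapBuf)

{

//a va_list must not be reused after vsnprintf has consumed it, so start it again

va_start(valist, message);

vsnprintf(heapBuf, size + 1, message, valist);

va_end(valist);

pBuf = heapBuf;

}

}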

t = localtime(&tt);

sprintf(dateTime, "%04d-%02d-%02d %02d:%02d:%02d", t->tm_year + 1900, t->tm_mon + 1, t->tm_mday, t->tm_hour, t->tm_min, t->tm_sec);

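//this printf can be replaced with writing to a file

//SDL_ThreadID() returns an unsigned long in SDL2, so print it with %lu

printf("%s %d %lu %s %s %d: %s\n", dateTime, level, (unsigned long)SDL_ThreadID(), fileName, methodName, line, pBuf);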

if (pBuf != buf)

av_free(pBuf);

}

//Set the log filter; LOGLEVEL_NONE disables all log output

void setLogFilter(LogLevel level) {

logLevelFilter = level;

}

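//Initialize the message queue. If this fails, call messageQueue_deinit to release whatever was already created

int messageQueue_init(MessageQueue* _this, int capacity, int elementSize) {

//zero the struct first so that messageQueue_deinit is safe after a partial failure

memset(_this, 0, sizeof(MessageQueue));

_this->_queue = av_fifo_alloc(elementSize * capacity);

if (!_this->_queue)

return ERRORCODE_MEMORY_ALLOCFAILED;

_this->_mtx = SDL_CreateMutex();

if (!_this->_mtx)

return ERRORCODE_SDL_CANNOTCREATEMUTEX;

_this->_cv = SDL_CreateCond();

if (!_this->_cv)

return ERRORCODE_MEMORY_ALLOCFAILED;

_this->_capacity = capacity;

_this->_elementSize = elementSize;

return 0;

}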

//Deinitialize the message queue

void messageQueue_deinit(MessageQueue* _this) {

if (_this->_queue)

av_fifo_free(_this->_queue);

if (_this->_cv)

SDL_DestroyCond(_this->_cv);

if (_this->_mtx)

SDL_DestroyMutex(_this->_mtx);

memset(_this, 0, sizeof(MessageQueue));

}

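//Push a message; returns 1 on success and 0 if the queue is full

int messageQueue_push(MessageQueue* _this, void* msg) {

int ret = 0;

SDL_LockMutex(_this->_mtx);

//av_fifo_generic_write does not check for free space itself, so check before writing

if (av_fifo_space(_this->_queue) >= _this->_elementSize)

{

ret = av_fifo_generic_write(_this->_queue, msg, _this->_elementSize, NULL);

SDL_CondSignal(_this->_cv);

}

SDL_UnlockMutex(_this->_mtx);

return ret > 0;

}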

//Poll a message

int messageQueue_poll(MessageQueue* _this, void* msg) {

SDL_LockMutex(_this->_mtx);

int size = av_fifo_size(_this->_queue);

if (size >= _this->_elementSize)

{